An Architect Is designing a data lake with Snowflake. The company has structured, semi-structured, and unstructured data. The company wants to save the data inside the data lake within the Snowflake system. The company is planning on sharing data among Its corporate branches using Snowflake data sharing.

What should be considered when sharing the unstructured data within Snowflake?

A media company needs a data pipeline that will ingest customer review data into a Snowflake table, and apply some transformations. The company also needs to use Amazon Comprehend to do sentiment analysis and make the de-identified final data set available publicly for advertising companies who use different cloud providers in different regions.

The data pipeline needs to run continuously and efficiently as new records arrive in the object storage leveraging event notifications. Also, the operational complexity, maintenance of the infrastructure, including platform upgrades and security, and the development effort should be minimal.

Which design will meet these requirements?

A user, analyst_user has been granted the analyst_role, and is deploying a SnowSQL script to run as a background service to extract data from Snowflake.

What steps should be taken to allow the IP addresses to be accessed? (Select TWO).

Which SQL ALTER command will MAXIMIZE memory and compute resources for a Snowpark stored procedure when executed on the snowpark_opt_wh warehouse?

Is it possible for a data provider account with a Snowflake Business Critical edition to share data with an Enterprise edition data consumer account?

A healthcare company wants to share data with a medical institute. The institute is running a Standard edition of Snowflake; the healthcare company is running a Business Critical edition.

How can this data be shared?

User1 and User2 are new users that were granted different functional roles.

User1 was granted the IT_ANALYST_ROLE

User2 was granted the FIN_ANALYST_ROLE

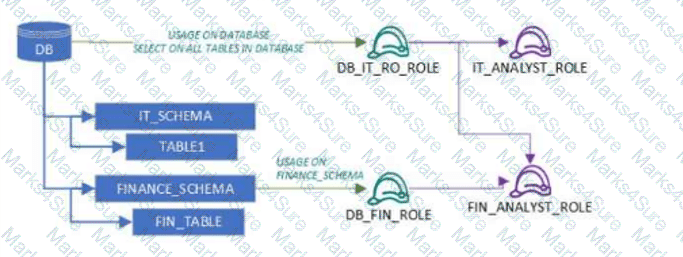

Review the following security design (as shown in the diagram):

A database (DB) grants USAGE and SELECT on all tables to DB_IT_RO_ROLE

DB_IT_RO_ROLE is granted to IT_ANALYST_ROLE

IT_SCHEMA contains TABLE1

FINANCE_SCHEMA grants USAGE and SELECT to DB_FIN_ROLE

DB_FIN_ROLE is granted to FIN_ANALYST_ROLE

FINANCE_SCHEMA contains FIN_TABLE

Which tables can each user read?

Data is being imported and stored as JSON in a VARIANT column. Query performance was fine, but most recently, poor query performance has been reported.

What could be causing this?

An Architect is designing Snowflake architecture to support fast Data Analyst reporting. To optimize costs, the virtual warehouse is configured to auto-suspend after 2 minutes of idle time. Queries are run once in the morning after refresh, but later queries run slowly.

Why is this occurring?

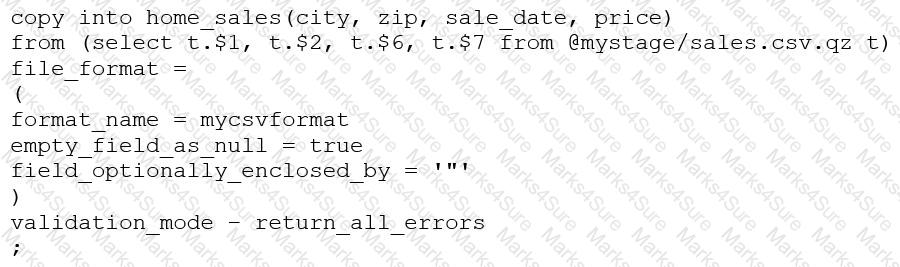

Consider the following COPY command which is loading data with CSV format into a Snowflake table from an internal stage through a data transformation query.

This command results in the following error:

SQL compilation error: invalid parameter 'validation_mode'

Assuming the syntax is correct, what is the cause of this error?

An Architect is integrating an application that needs to read and write data to Snowflake without installing any additional software on the application server.

How can this requirement be met?

A company has a Snowflake environment running in AWS us-west-2 (Oregon). The company needs to share data privately with a customer who is running their Snowflake environment in Azure East US 2 (Virginia).

What is the recommended sequence of operations that must be followed to meet this requirement?

Role A has the following permissions:

. USAGE on db1

. USAGE and CREATE VIEW on schemal in db1

. SELECT on tablel in schemal

Role B has the following permissions:

. USAGE on db2

. USAGE and CREATE VIEW on schema2 in db2

. SELECT on table2 in schema2

A user has Role A set as the primary role and Role B as a secondary role.

What command will fail for this user?

A company is designing high availability and disaster recovery plans and needs to maximize redundancy and minimize recovery time objectives for their critical application processes. Cost is not a concern as long as the solution is the best available. The plan so far consists of the following steps:

1. Deployment of Snowflake accounts on two different cloud providers.

2. Selection of cloud provider regions that are geographically far apart.

3. The Snowflake deployment will replicate the databases and account data between both cloud provider accounts.

4. Implementation of Snowflake client redirect.

What is the MOST cost-effective way to provide the HIGHEST uptime and LEAST application disruption if there is a service event?

Which organization-related tasks can be performed by the ORGADMIN role? (Choose three.)

A company wants to configure the Client Redirect feature on their Snowflake account to ensure business continuity during failover events.

How should an Architect accomplish this?

The following table exists in the production database:

A regulatory requirement states that the company must mask the username for events that are older than six months based on the current date when the data is queried.

How can the requirement be met without duplicating the event data and making sure it is applied when creating views using the table or cloning the table?

Which of the following ingestion methods can be used to load near real-time data by using the messaging services provided by a cloud provider?

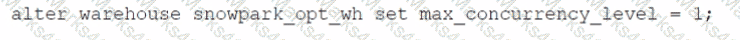

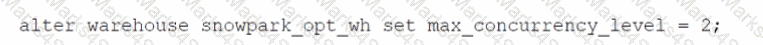

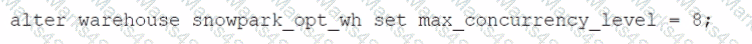

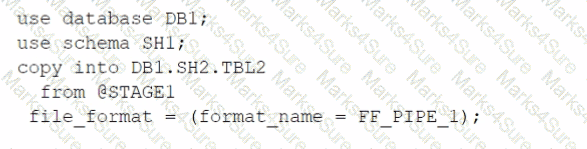

Which SQL alter command will MAXIMIZE memory and compute resources for a Snowpark stored procedure when executed on the snowpark_opt_wh warehouse?

A)

B)

C)

D)

How can the Snowflake context functions be used to help determine whether a user is authorized to see data that has column-level security enforced? (Select TWO).

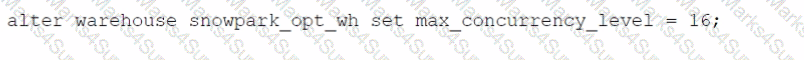

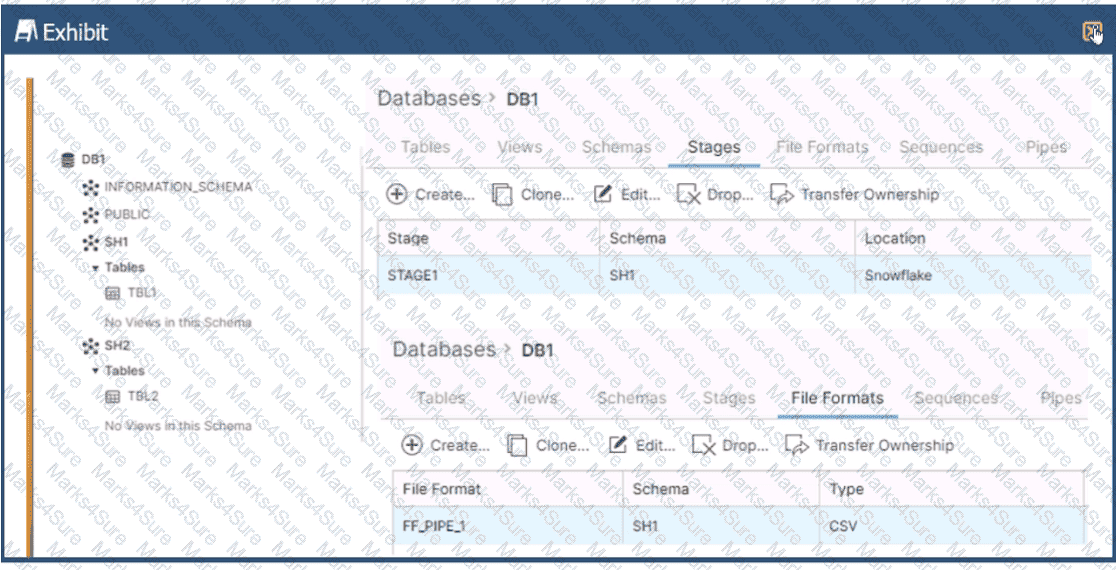

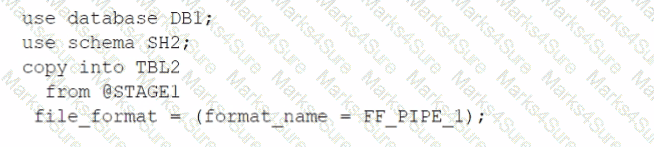

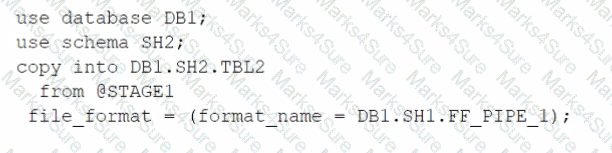

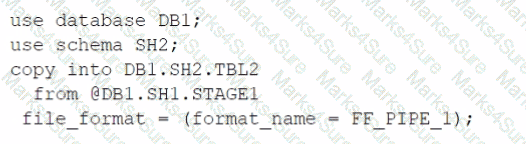

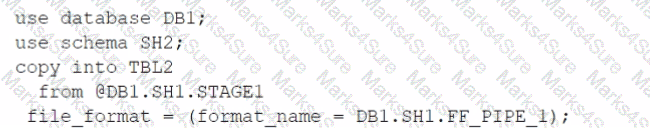

Based on the architecture in the image, how can the data from DB1 be copied into TBL2? (Select TWO).

A)

B)

C)

D)

E)

An Architect is designing an architecture that must meet the following requirements:

Use Tri-Secret Secure

Share some information stored in a view with another Snowflake customer

Hide portions of sensitive information in columns

Use zero-copy cloning to refresh non-production from production

Which design elements must be implemented? (Select THREE).

A company has a Snowflake account named ACCOUNTA in AWS us-east-1 region. The company stores its marketing data in a Snowflake database named MARKET_DB. One of the company’s business partners has an account named PARTNERB in Azure East US 2 region. For marketing purposes the company has agreed to share the database MARKET_DB with the partner account.

Which of the following steps MUST be performed for the account PARTNERB to consume data from the MARKET_DB database?

The Data Engineering team at a large manufacturing company needs to engineer data coming from many sources to support a wide variety of use cases and data consumer requirements which include:

1) Finance and Vendor Management team members who require reporting and visualization

2) Data Science team members who require access to raw data for ML model development

3) Sales team members who require engineered and protected data for data monetization

What Snowflake data modeling approaches will meet these requirements? (Choose two.)

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

In a managed access schema, what are characteristics of the roles that can manage object privileges? (Select TWO).

A company has several sites in different regions from which the company wants to ingest data.

Which of the following will enable this type of data ingestion?

Files arrive in an external stage every 10 seconds from a proprietary system. The files range in size from 500 K to 3 MB. The data must be accessible by dashboards as soon as it arrives.

How can a Snowflake Architect meet this requirement with the LEAST amount of coding? (Choose two.)

When loading data into a table that captures the load time in a column with a default value of either CURRENT_TIME () or CURRENT_TIMESTAMP() what will occur?

What Snowflake system functions are used to view and or monitor the clustering metadata for a table? (Select TWO).

A data platform team creates two multi-cluster virtual warehouses with the AUTO_SUSPEND value set to NULL on one. and '0' on the other. What would be the execution behavior of these virtual warehouses?

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the f_0RDERS fact table.

2. Load the changed order data into the special table ORDER _REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

When using the Snowflake Connector for Kafka, what data formats are supported for the messages? (Choose two.)

Company A would like to share data in Snowflake with Company B. Company B is not on the same cloud platform as Company A.

What is required to allow data sharing between these two companies?

A company needs to share its product catalog data with one of its partners. The product catalog data is stored in two database tables: product_category, and product_details. Both tables can be joined by the product_id column. Data access should be governed, and only the partner should have access to the records.

The partner is not a Snowflake customer. The partner uses Amazon S3 for cloud storage.

Which design will be the MOST cost-effective and secure, while using the required Snowflake features?

A group of order_admin users must delete records older than 5 years from an orders table without having DELETE privileges. The order_manager role has DELETE privileges.

How can this be achieved?

An Architect is designing a file ingestion recovery solution. The project will use an internal named stage for file storage. Currently, in the case of an ingestion failure, the Operations team must manually download the failed file and check for errors.

Which downloading method should the Architect recommend that requires the LEAST amount of operational overhead?

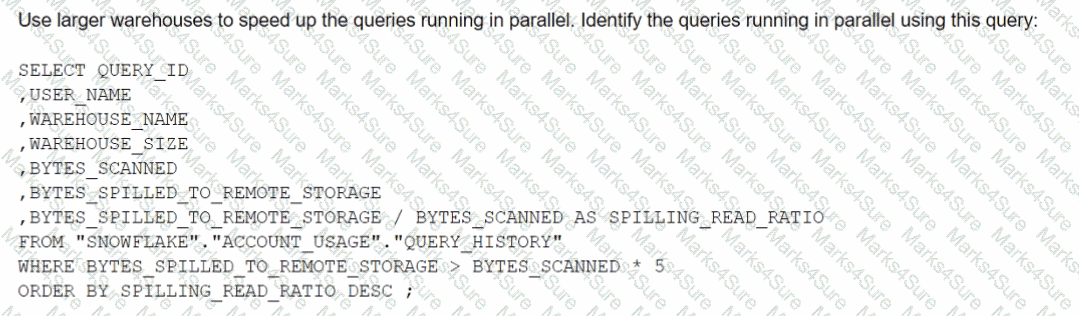

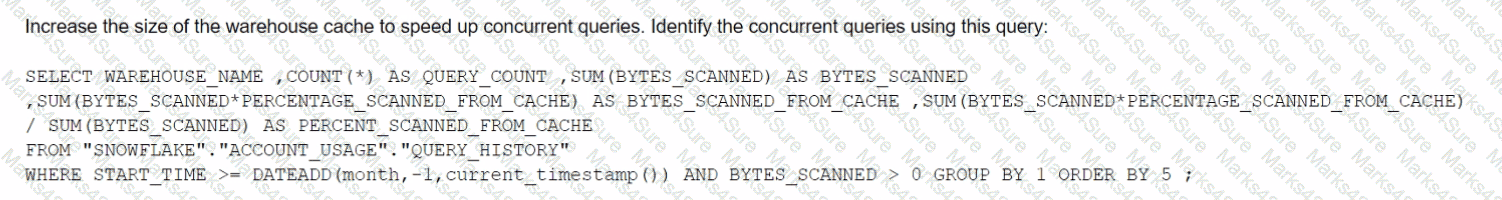

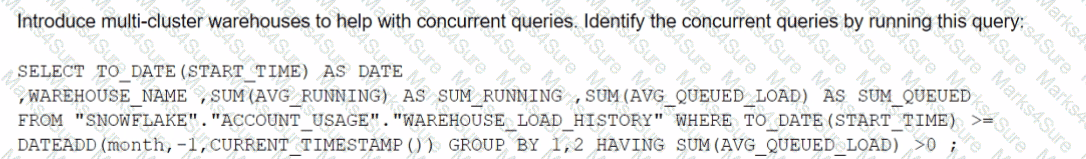

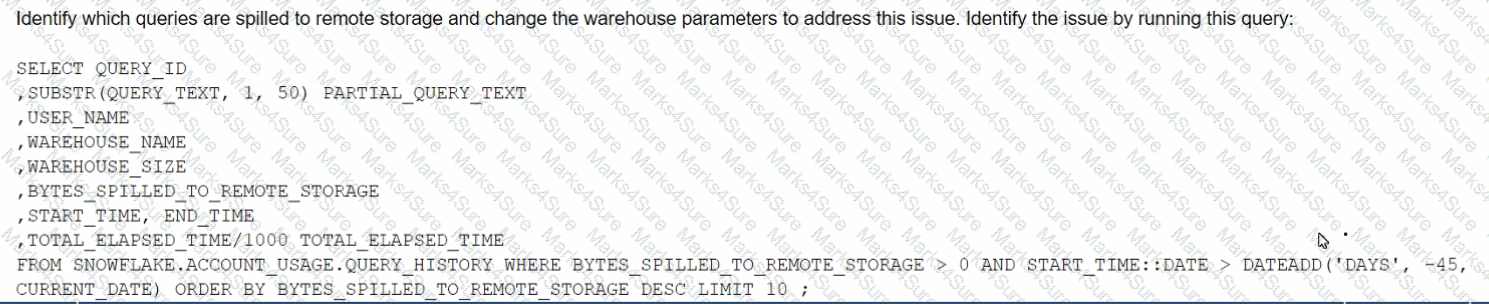

The Business Intelligence team reports that when some team members run queries for their dashboards in parallel with others, the query response time is getting significantly slower What can a Snowflake Architect do to identify what is occurring and troubleshoot this issue?

A)

B)

C)

D)

An Architect needs to design a data unloading strategy for Snowflake, that will be used with the COPY INTO <location> command.

Which configuration is valid?

Why might a Snowflake Architect use a star schema model rather than a 3NF model when designing a data architecture to run in Snowflake? (Select TWO).

A company is storing large numbers of small JSON files (ranging from 1-4 bytes) that are received from IoT devices and sent to a cloud provider. In any given hour, 100,000 files are added to the cloud provider.

What is the MOST cost-effective way to bring this data into a Snowflake table?

An Architect for a multi-national transportation company has a system that is used to check the weather conditions along vehicle routes. The data is provided to drivers.

The weather information is delivered regularly by a third-party company and this information is generated as JSON structure. Then the data is loaded into Snowflake in a column with a VARIANT data type. This

table is directly queried to deliver the statistics to the drivers with minimum time lapse.

A single entry includes (but is not limited to):

- Weather condition; cloudy, sunny, rainy, etc.

- Degree

- Longitude and latitude

- Timeframe

- Location address

- Wind

The table holds more than 10 years' worth of data in order to deliver the statistics from different years and locations. The amount of data on the table increases every day.

The drivers report that they are not receiving the weather statistics for their locations in time.

What can the Architect do to deliver the statistics to the drivers faster?

An event table has 150B rows and 1.5M micro-partitions, with the following statistics:

Column NDV*

A_ID 11K

C_DATE 110

NAME 300K

EVENT_ACT_0 1.1G

EVENT_ACT_4 2.2G

*NDV = Number of Distinct Values

What three clustering keys should be used, in order?

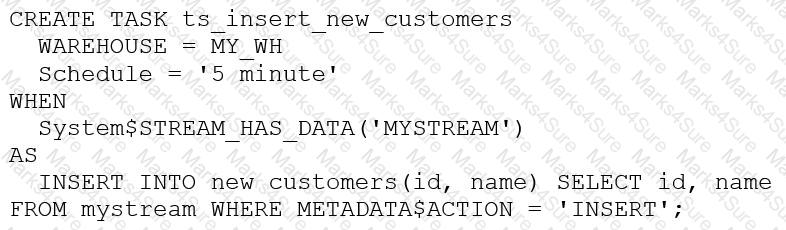

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to auto_suspend – 60 and used exclusively for this task, which statement is true?

An Architect needs to automate the daily Import of two files from an external stage into Snowflake. One file has Parquet-formatted data, the other has CSV-formatted data.

How should the data be joined and aggregated to produce a final result set?