Which two statements are correct when assigning partitions to the consumers in a consumer group using the assign() API?

(Select two.)

(A consumer application needs to use an at-most-once delivery semantic.

What is the best consumer configuration and code skeleton to avoid duplicate messages being read?)

You use Kafka Connect with the JDBC source connector to extract data from a large database and push it into Kafka.

The database contains tens of tables, and the current connector is unable to process the data fast enough.

You add more Kafka Connect workers, but throughput doesn't improve.

What should you do next?

What is a consequence of increasing the number of partitions in an existing Kafka topic?

You are building a system for a retail store selling products to customers.

Which three datasets should you model as a GlobalKTable?

(Select three.)

You have a topic with four partitions. The application reads from it using two consumers in a single consumer group.

Processing is CPU-bound, and lag is increasing.

What should you do?

Your Kafka cluster has five brokers. The topic t1 on the cluster has:

Two partitions

Replication factor = 4

min.insync.replicas = 3

You need strong durability guarantees for messages written to topic t1.

You configure a producer with acks=all, and all the replicas for t1 are in sync.

How many brokers need to acknowledge a message before it is considered committed?
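The arithmetic behind this scenario can be sketched as follows. This is a simplified model, not broker code: the function name `acks_required` and its parameters are hypothetical. It assumes that with acks=all the leader waits for every replica currently in the in-sync replica set (ISR), and that min.insync.replicas only sets the minimum ISR size below which the write is rejected.

```python
def acks_required(isr_size: int, min_insync_replicas: int) -> int:
    """Return how many replicas must acknowledge a write sent with acks=all.

    Hypothetical helper for illustration only. Raises ValueError when the
    ISR is smaller than min.insync.replicas, modelling a rejected write.
    """
    if isr_size < min_insync_replicas:
        raise ValueError("not enough in-sync replicas to accept the write")
    # With acks=all the leader waits for every replica currently in the ISR,
    # not just for min.insync.replicas of them.
    return isr_size


# Topic t1: replication factor 4, all replicas in sync, min.insync.replicas = 3.
print(acks_required(isr_size=4, min_insync_replicas=3))  # prints 4
```

In this model the broker count (five) and the partition count (two) do not enter the calculation; only the size of the ISR for the partition being written does.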

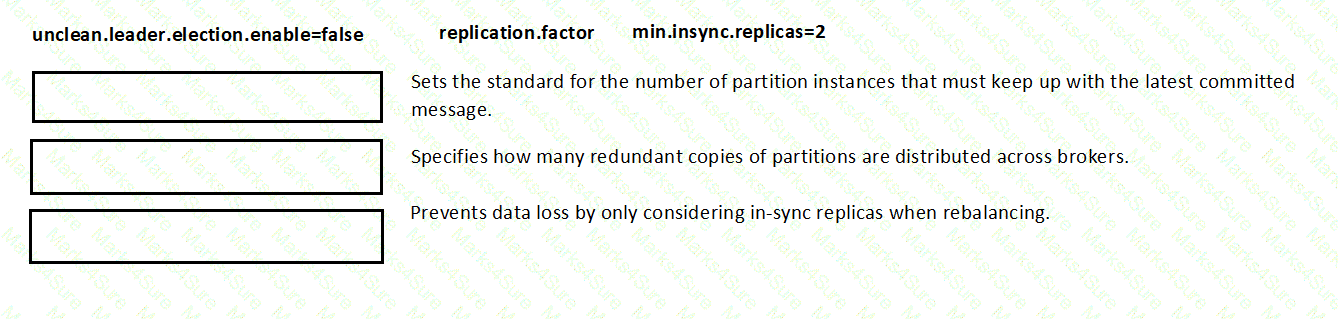

Match the topic configuration setting with the reason the setting affects topic durability.

(You are given settings like unclean.leader.election.enable=false, replication.factor, min.insync.replicas=2)

You are developing a Java application using a Kafka consumer.

You need to integrate Kafka’s client logs with your own application’s logs using log4j2.

Which Java library dependency must you include in your project?

What is the default maximum size of a message the Apache Kafka broker can accept?

(You create an Orders topic with 10 partitions.

The topic receives data at high velocity.

Your Kafka Streams application initially runs on a server with four CPU threads.

You move the application to another server with 10 CPU threads to improve performance.

What does this example describe?)

You create a topic named IoT-Data with 10 partitions and a replication factor of three.

A producer sends 1 MB messages compressed with Gzip.

Which two statements are true in this scenario?

(Select two.)

(You are configuring a source connector that writes records to an Orders topic.

You need to send some of the records to a different topic.

Which Single Message Transform (SMT) is best suited for this requirement?)

You have a Kafka Connect cluster with multiple connectors.

One connector is not working as expected.

How can you find logs related to that specific connector?

You are writing to a topic with acks=all.

The producer receives acknowledgments but you notice duplicate messages.

You find that timeouts due to network delay are causing resends.

Which configuration should you use to prevent duplicates?

(You are writing lightweight XML messages to a Kafka topic named userinfo.

Which format should you use for the value field?)

You create a producer that writes messages about bank account transactions from tens of thousands of different customers into a topic.

Your consumers must process these messages with low latency and minimize consumer lag.

Processing takes ~6x longer than producing.

Transactions for each bank account must be processed in order.

Which strategy should you use?
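One way to reason about the ordering constraint is to look at how a record key maps to a partition. The sketch below is a minimal illustration, not the Kafka client's implementation: `partition_for` and `route` are hypothetical helpers, and `zlib.crc32` stands in for the hash used by the real default partitioner (an assumption made to keep the example dependency-free).

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition (crc32 is a stand-in hash, an assumption)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(records, num_partitions):
    """Group (account_id, payload) records by partition, preserving arrival order."""
    partitions = defaultdict(list)
    for account_id, payload in records:
        partitions[partition_for(account_id, num_partitions)].append((account_id, payload))
    return partitions


records = [("acct-1", "deposit"), ("acct-2", "withdraw"), ("acct-1", "transfer")]
routed = route(records, num_partitions=12)

# Every record for acct-1 lands in the same partition, in the order it was sent.
p = partition_for("acct-1", 12)
print([r for r in routed[p] if r[0] == "acct-1"])
```

Because the same key always hashes to the same partition, per-account order is kept inside that partition, while different accounts spread across partitions that separate consumers in the group can read in parallel.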

(You deploy a Kafka Streams application with five application instances.

Kafka Streams stores application metadata using internal topics.

Auto-topic creation is disabled in the Kafka cluster.

Which statement about this scenario is true?)

(A stream processing application tracks user activity in online shopping carts, including items added, removed, and ordered throughout the day for each user.

You need to capture data to identify possible periods of user inactivity.

Which type of Kafka Streams window should you use?)

Your application is consuming from a topic configured with a deserializer.

It needs to be resilient to badly formatted records ("poison pills"). You surround the poll() call with a try/catch for RecordDeserializationException.

You need to log the bad record, skip it, and continue processing.

Which action should you take in the catch block?

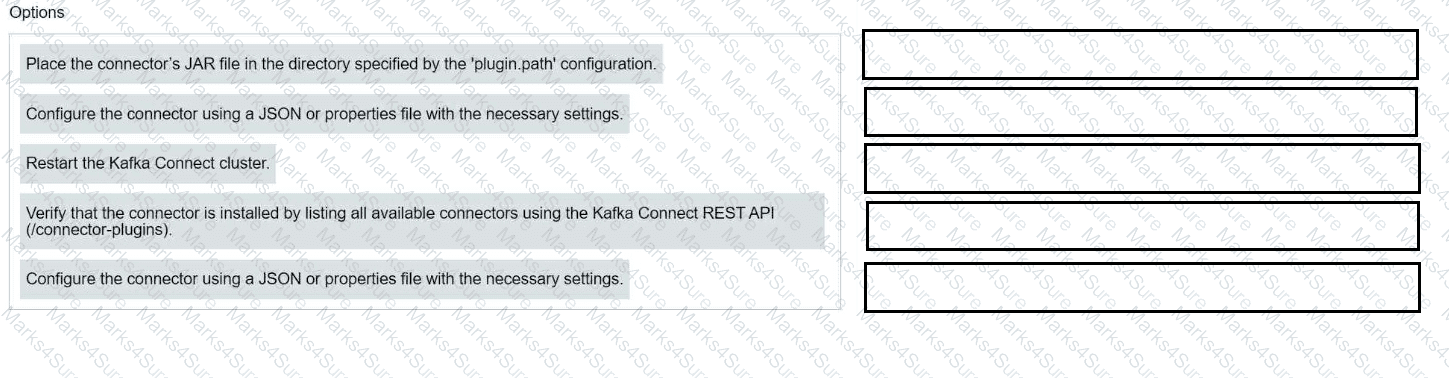

Match each configuration parameter with the correct deployment step in installing a Kafka connector.