11 of 55.

Which Spark configuration controls the number of tasks that can run in parallel on an executor?
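As background for this question, the number of task slots on one executor is commonly reasoned about as `spark.executor.cores` divided by `spark.task.cpus` (which defaults to 1). The sketch below only does that arithmetic; the helper name `max_parallel_tasks` is hypothetical and nothing here reads a live Spark configuration.

```python
def max_parallel_tasks(executor_cores: int, task_cpus: int = 1) -> int:
    """Task slots on one executor: spark.executor.cores // spark.task.cpus."""
    if executor_cores < 1 or task_cpus < 1:
        raise ValueError("both settings must be positive integers")
    return executor_cores // task_cpus


print(max_parallel_tasks(4))      # 4 cores, 1 CPU per task
print(max_parallel_tasks(8, 2))   # 8 cores, 2 CPUs per task
```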

A data engineer is streaming data from Kafka and requires:

- Minimal latency
- Exactly-once processing guarantees

Which trigger mode should be used?

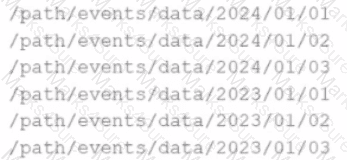

A data engineer is asked to build an ingestion pipeline for a set of Parquet files delivered by an upstream team on a nightly basis. The data is stored in a directory structure with a base path of "/path/events/data". The upstream team drops daily data into the underlying subdirectories following the convention year/month/day.

A few examples of the directory structure are:

Which of the following code snippets will read all the data within the directory structure?
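One way to pick up files in nested year/month/day subdirectories is the `recursiveFileLookup` reader option. The sketch below assumes `spark` is an existing SparkSession; the function name `read_all_events` is hypothetical. Note that recursive lookup disables partition inference, so the directory names would not become columns.

```python
def read_all_events(spark, base_path="/path/events/data"):
    """Read every Parquet file under base_path, including nested subdirectories.

    `spark` is assumed to be an existing SparkSession.
    """
    return (
        spark.read
        .option("recursiveFileLookup", "true")  # descend into year/month/day folders
        .parquet(base_path)
    )
```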

An engineer wants to join two DataFrames df1 and df2 on the respective employee_id and emp_id columns:

df1: employee_id INT, name STRING

df2: emp_id INT, department STRING

The engineer uses:

result = df1.join(df2, df1.employee_id == df2.emp_id, how='inner')

What is the behaviour of the code snippet?

A Data Analyst needs to retrieve employees with 5 or more years of tenure.

Which code snippet filters and shows the list?

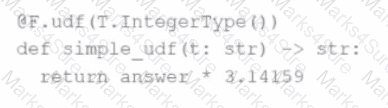

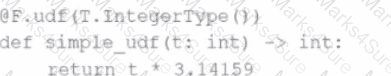

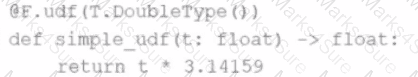

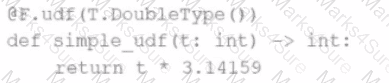

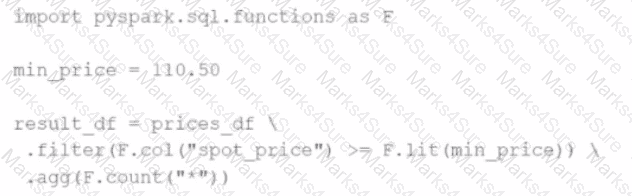

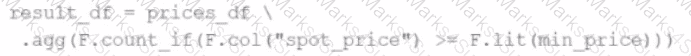

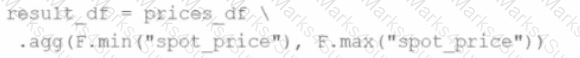

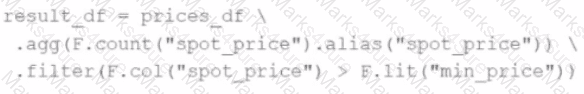

The following code fragment results in an error:

Which code fragment should be used instead?

A)

B)

C)

D)

A data analyst wants to add a column date derived from a timestamp column.

Options:

A data scientist is working on a project that requires processing large amounts of structured data, performing SQL queries, and applying machine learning algorithms. The data scientist is considering using Apache Spark for this task.

Which combination of Apache Spark modules should the data scientist use in this scenario?

Options:

44 of 55.

A data engineer is working on a real-time analytics pipeline using Spark Structured Streaming.

They want the system to process incoming data in micro-batches at a fixed interval of 5 seconds.

Which code snippet fulfills this requirement?
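A fixed micro-batch interval is expressed with the `processingTime` trigger. The following is a minimal sketch, assuming `stream_df` is a streaming DataFrame; the console sink and the function name `start_five_second_batches` are placeholders chosen only for illustration.

```python
def start_five_second_batches(stream_df):
    """Start a streaming query that fires a micro-batch every 5 seconds.

    `stream_df` is assumed to be a streaming DataFrame; the console sink
    is an illustrative placeholder, not part of the original question.
    """
    return (
        stream_df.writeStream
        .format("console")
        .trigger(processingTime="5 seconds")  # fixed-interval micro-batches
        .start()
    )
```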

30 of 55.

A data engineer is working on a num_df DataFrame and has a Python UDF defined as:

def cube_func(val):
    return val * val * val

Which code fragment registers and uses this UDF as a Spark SQL function to work with the DataFrame num_df?
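For reference, a Python function can be exposed to Spark SQL with `spark.udf.register`. The sketch below assumes `spark` is an existing SparkSession and that `num_df` has a numeric column named `num`; the column name, the view name `num_view`, and the wrapper `cube_with_sql` are all hypothetical.

```python
def cube_func(val):
    return val * val * val


def cube_with_sql(spark, num_df):
    """Register cube_func as a SQL function and apply it to num_df.

    Assumes a column called `num` (hypothetical) and an installed pyspark.
    """
    from pyspark.sql.types import LongType  # imported lazily; requires pyspark

    spark.udf.register("cube_func", cube_func, LongType())
    num_df.createOrReplaceTempView("num_view")  # hypothetical view name
    return spark.sql("SELECT num, cube_func(num) AS cubed FROM num_view")
```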

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?

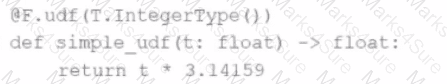

Given this code:

.withWatermark("event_time", "10 minutes")

.groupBy(window("event_time", "15 minutes"))

.count()

What happens to data that arrives after the watermark threshold?

Options:

Which Spark configuration controls the number of tasks that can run in parallel on the executor?

Options:

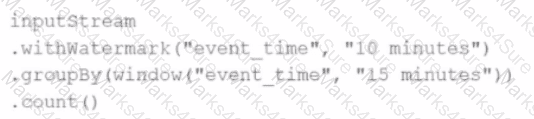

A developer needs to produce a Python dictionary using data stored in a small Parquet table, which looks like this:

The resulting Python dictionary must contain a mapping of region -> region_id for the three smallest region_id values.

Which code fragment meets the requirements?

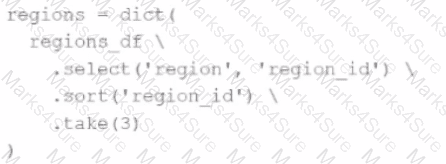

A)

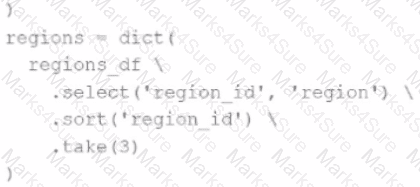

B)

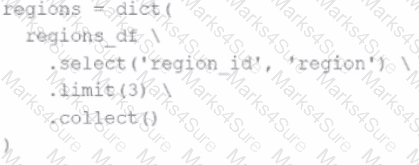

C)

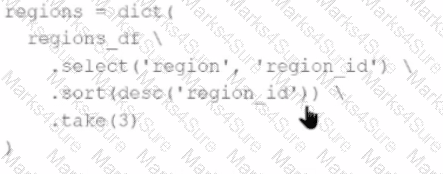

D)


47 of 55.

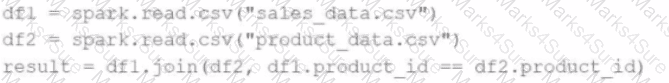

A data engineer has written the following code to join two DataFrames df1 and df2:

df1 = spark.read.csv("sales_data.csv")

df2 = spark.read.csv("product_data.csv")

df_joined = df1.join(df2, df1.product_id == df2.product_id)

The DataFrame df1 contains ~10 GB of sales data, and df2 contains ~8 MB of product data.

Which join strategy will Spark use?
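The sizes in this question can be compared against the automatic broadcast threshold, `spark.sql.autoBroadcastJoinThreshold`, which defaults to 10 MB. The sketch below only does that comparison, assuming Spark's size estimate for `df2` is close to the stated ~8 MB; the helper name `fits_broadcast` is hypothetical.

```python
# Default of spark.sql.autoBroadcastJoinThreshold, in bytes (10 MB).
DEFAULT_AUTO_BROADCAST_THRESHOLD = 10 * 1024 * 1024


def fits_broadcast(estimated_bytes, threshold=DEFAULT_AUTO_BROADCAST_THRESHOLD):
    """True if an estimated table size is within the auto-broadcast threshold.

    A threshold of -1 disables automatic broadcasting.
    """
    return threshold >= 0 and estimated_bytes <= threshold


print(fits_broadcast(8 * 1024 * 1024))    # ~8 MB product data
print(fits_broadcast(10 * 1024 ** 3))     # ~10 GB sales data
```

Under these assumptions the smaller side qualifies for broadcasting and the larger side does not.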

18 of 55.

An engineer has two DataFrames — df1 (small) and df2 (large). To optimize the join, the engineer uses a broadcast join:

from pyspark.sql.functions import broadcast

df_result = df2.join(broadcast(df1), on="id", how="inner")

What is the purpose of using broadcast() in this scenario?

A developer is running Spark SQL queries and notices underutilization of resources. Executors are idle, and the number of tasks per stage is low.

What should the developer do to improve cluster utilization?

A data engineer writes the following code to join two DataFrames df1 and df2:

df1 = spark.read.csv("sales_data.csv") # ~10 GB

df2 = spark.read.csv("product_data.csv") # ~8 MB

result = df1.join(df2, df1.product_id == df2.product_id)

Which join strategy will Spark use?

Which UDF implementation calculates the length of strings in a Spark DataFrame?

An engineer has a large ORC file located at /file/test_data.orc and wants to read only specific columns to reduce memory usage.

Which code fragment will select the columns, i.e., col1, col2, during the reading process?

A data scientist is working with a Spark DataFrame called customerDF that contains customer information. The DataFrame has a column named email with customer email addresses. The data scientist needs to split this column into username and domain parts.

Which code snippet splits the email column into username and domain columns?

A data scientist is working on a large dataset in Apache Spark using PySpark. The data scientist has a DataFrame df with columns user_id, product_id, and purchase_amount and needs to perform some operations on this data efficiently.

Which sequence of operations results in transformations that require a shuffle followed by transformations that do not?

A data scientist at a financial services company is working with a Spark DataFrame containing transaction records. The DataFrame has millions of rows and includes columns for transaction_id, account_number, transaction_amount, and timestamp. Due to an issue with the source system, some transactions were accidentally recorded multiple times with identical information across all fields. The data scientist needs to remove rows with duplicates across all fields to ensure accurate financial reporting.

Which approach should the data scientist use to deduplicate the transactions using PySpark?
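When rows are identical across every field, calling `dropDuplicates()` with no arguments compares all columns. A minimal sketch, assuming `transactions_df` is the DataFrame described above; the wrapper name `remove_exact_duplicates` is hypothetical.

```python
def remove_exact_duplicates(transactions_df):
    """Drop rows that are identical across every column.

    With no subset argument, dropDuplicates() considers all columns.
    """
    return transactions_df.dropDuplicates()
```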

A developer wants to test Spark Connect with an existing Spark application.

What are the two alternative ways the developer can start a local Spark Connect server without changing their existing application code? (Choose 2 answers)

5 of 55.

What is the relationship between jobs, stages, and tasks during execution in Apache Spark?

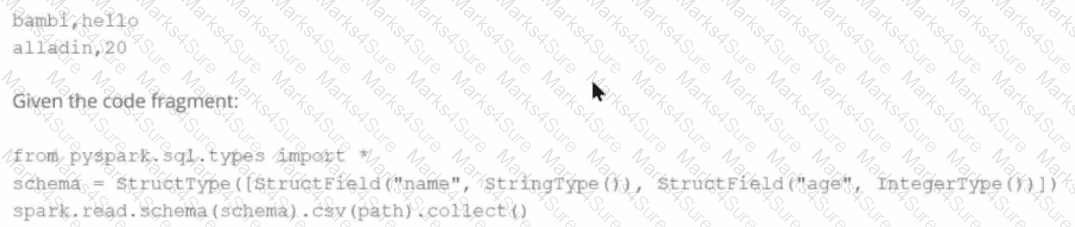

Given a CSV file with the content:

And the following code:

from pyspark.sql.types import *

schema = StructType([
    StructField("name", StringType()),
    StructField("age", IntegerType())
])

spark.read.schema(schema).csv(path).collect()

What is the resulting output?

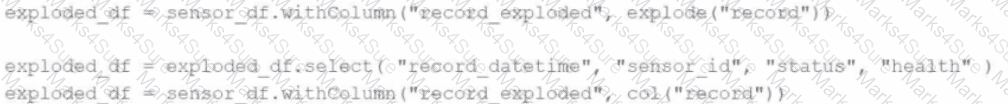

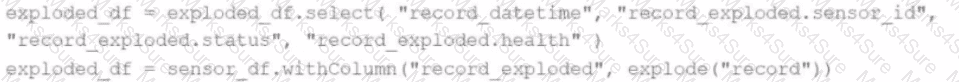

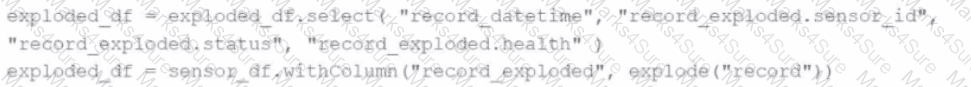

A Data Analyst is working on the DataFrame sensor_df, which contains two columns:

Which code fragment returns a DataFrame that splits the record column into separate columns and has one array item per row?

A)

B)

C)

D)

A developer wants to refactor some older Spark code to leverage built-in functions introduced in Spark 3.5.0. The existing code performs array manipulations manually. Which of the following code snippets utilizes new built-in functions in Spark 3.5.0 for array operations?

A)

B)

C)

D)

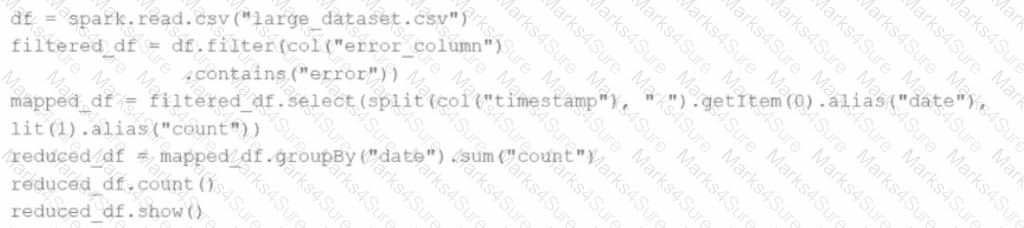

Given the code:

df = spark.read.csv("large_dataset.csv")

filtered_df = df.filter(col("error_column").contains("error"))

mapped_df = filtered_df.select(split(col("timestamp"), " ").getItem(0).alias("date"), lit(1).alias("count"))

reduced_df = mapped_df.groupBy("date").sum("count")

reduced_df.count()

reduced_df.show()

At which point will Spark actually begin processing the data?

A data engineer is running a batch processing job on a Spark cluster with the following configuration:

- 10 worker nodes
- 16 CPU cores per worker node
- 64 GB RAM per node

The data engineer wants to allocate four executors per node, each executor using four cores.

What is the total number of CPU cores used by the application?
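The arithmetic behind this question can be worked through directly from the figures given. The variable names below are illustrative only.

```python
worker_nodes = 10
executors_per_node = 4
cores_per_executor = 4

# 4 executors x 4 cores = 16, which matches the 16 cores available per node.
cores_per_node = executors_per_node * cores_per_executor

total_cores = worker_nodes * cores_per_node
print(total_cores)  # 160
```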

20 of 55.

What is the difference between df.cache() and df.persist() on a Spark DataFrame?

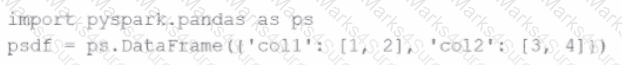

Given the code fragment:

import pyspark.pandas as ps

psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})

Which method is used to convert a Pandas API on Spark DataFrame (pyspark.pandas.DataFrame) into a standard PySpark DataFrame (pyspark.sql.DataFrame)?
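For context, pandas-on-Spark DataFrames expose a `to_spark()` method for this conversion. A minimal sketch, assuming `psdf` is the `pyspark.pandas.DataFrame` from the fragment above; the wrapper name `to_spark_dataframe` is hypothetical.

```python
def to_spark_dataframe(psdf):
    """Convert a pandas-on-Spark DataFrame to a pyspark.sql.DataFrame."""
    return psdf.to_spark()
```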

27 of 55.

A data engineer needs to add all the rows from one table to all the rows from another, but not all the columns in the first table exist in the second table.

The error message is:

AnalysisException: UNION can only be performed on tables with the same number of columns.

The existing code is:

au_df.union(nz_df)

The DataFrame au_df has one extra column that does not exist in the DataFrame nz_df, but otherwise both DataFrames have the same column names and data types.

What should the data engineer fix in the code to ensure the combined DataFrame can be produced as expected?
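One way to combine DataFrames whose column sets differ is `unionByName` with `allowMissingColumns=True` (available since Spark 3.1), which fills the missing column with nulls. A minimal sketch, assuming `au_df` and `nz_df` are the DataFrames described above; the wrapper name is hypothetical.

```python
def union_with_missing_columns(au_df, nz_df):
    """Union by column name, filling columns absent from nz_df with nulls."""
    return au_df.unionByName(nz_df, allowMissingColumns=True)
```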

A DataFrame df has columns name, age, and salary. The developer needs to sort the DataFrame by age in ascending order and salary in descending order.

Which code snippet meets the requirement of the developer?
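Mixed sort directions can be expressed by passing a list of booleans to the `ascending` parameter of `orderBy`. A minimal sketch, assuming `df` has the `age` and `salary` columns described above; the wrapper name `sort_by_age_then_salary` is hypothetical.

```python
def sort_by_age_then_salary(df):
    """Sort by age ascending, then by salary descending."""
    return df.orderBy(["age", "salary"], ascending=[True, False])
```

An equivalent form uses column expressions, for example `col("age").asc()` together with `col("salary").desc()`.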

3 of 55.

A data engineer observes that the upstream streaming source feeds the event table frequently and sends duplicate records. Upon analyzing the current production table, the data engineer found that the time difference in the event_timestamp column of the duplicate records is, at most, 30 minutes.

To remove the duplicates, the engineer adds the code:

df = df.withWatermark("event_timestamp", "30 minutes")

What is the result?
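For context, `withWatermark` on its own only declares how late data may arrive; a deduplication operator still has to be applied for duplicates to be dropped. The sketch below pairs the watermark with `dropDuplicatesWithinWatermark`, which was added in Spark 3.5. It assumes `df` is a streaming DataFrame, and the key columns are supplied by the caller because the question does not name them; the wrapper name is hypothetical.

```python
def dedupe_within_watermark(df, key_columns):
    """Drop duplicates that arrive within the 30-minute watermark.

    `key_columns` is a list of column names identifying a record; it is a
    caller-supplied assumption, not something stated in the question.
    """
    return (
        df.withWatermark("event_timestamp", "30 minutes")
        .dropDuplicatesWithinWatermark(key_columns)
    )
```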