You need to assign permissions for the data store in the AnalyticsPOC workspace. The solution must meet the security requirements.

Which additional permissions should you assign when you share the data store? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

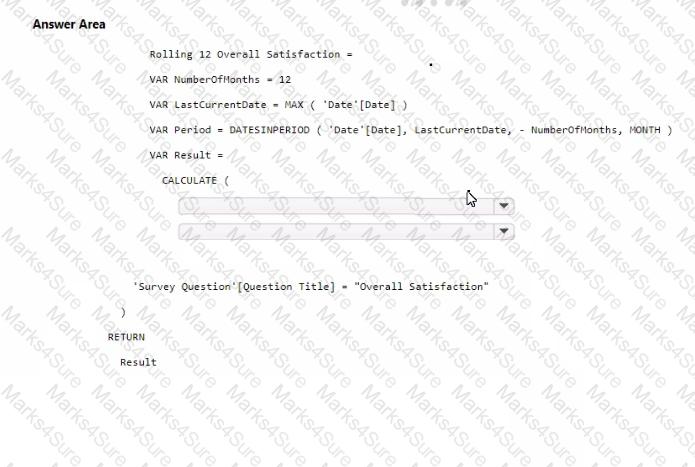

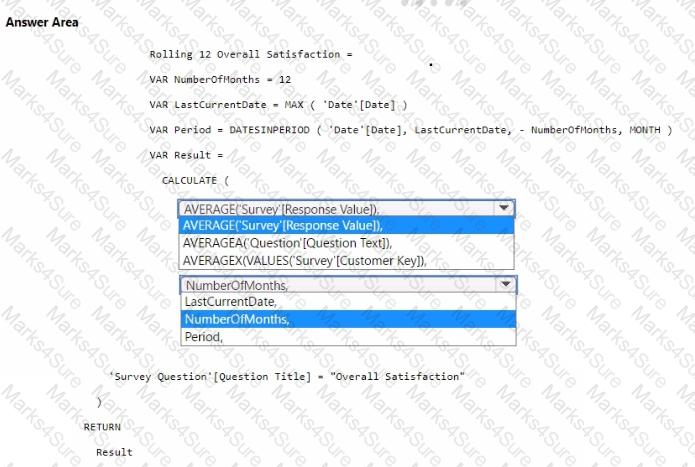

You need to create a DAX measure to calculate the average overall satisfaction score.

How should you complete the DAX code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to implement the date dimension in the data store. The solution must meet the technical requirements.

What are two ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

What should you recommend using to ingest the customer data into the data store in the AnalyticsPOC workspace?

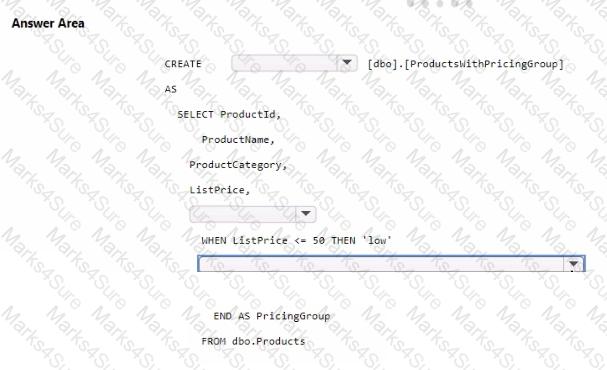

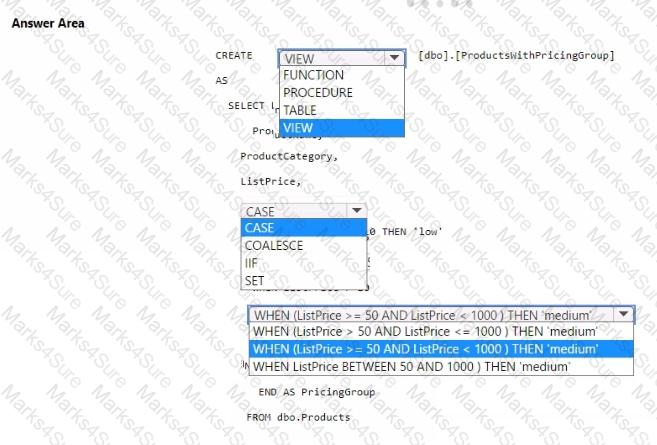

You need to resolve the issue with the pricing group classification.

How should you complete the T-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

What should you use to implement calculation groups for the Research division semantic models?

Which syntax should you use in a notebook to access the Research division data for Productline1?

A)

B)

C)

D)

You need to refresh the Orders table of the Online Sales department. The solution must meet the semantic model requirements. What should you include in the solution?

You have a Fabric tenant that contains a semantic model. The model contains 15 tables.

You need to programmatically change each column that ends in the word Key to meet the following requirements:

• Hide the column.

• Set Nullable to False.

• Set Summarize By to None.

• Set Available in MDX to False.

• Mark the column as a key column.

What should you use?

You need to ensure that Contoso can use version control to meet the data analytics requirements and the general requirements. What should you do?

You need to recommend a solution to group the Research division workspaces.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

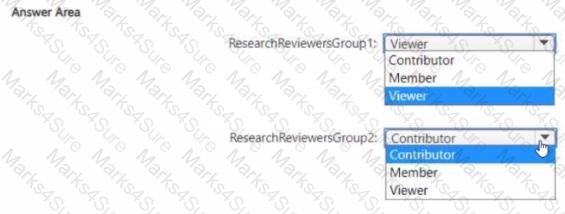

Which workspace role assignments should you recommend for ResearchReviewersGroup1 and ResearchReviewersGroup2? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to recommend which type of Fabric capacity SKU meets the data analytics requirements for the Research division. What should you recommend?

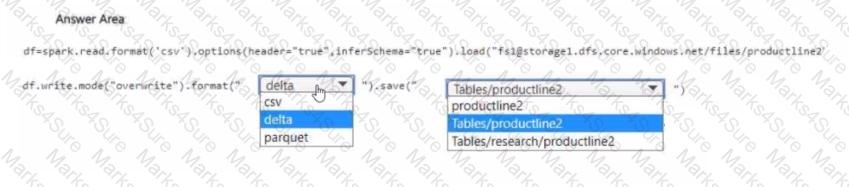

You need to migrate the Research division data for Productline2. The solution must meet the data preparation requirements. How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have a Fabric tenant that contains a semantic model. The model uses Direct Lake mode.

You suspect that some DAX queries load unnecessary columns into memory.

You need to identify the frequently used columns that are loaded into memory.

What are two ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct answer is worth one point.

You have a Microsoft Power BI semantic model that contains measures. The measures use multiple CALCULATE functions and a FILTER function.

You are evaluating the performance of the measures.

In which use case will replacing the FILTER function with the KEEPFILTERS function reduce execution time?

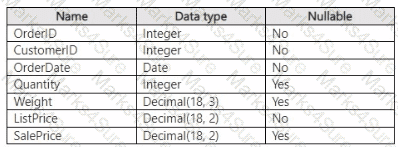

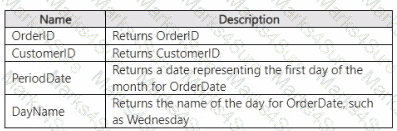

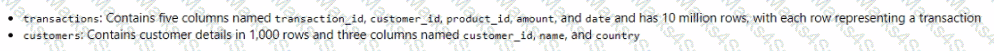

You have a Fabric warehouse that contains a table named Sales.Orders. Sales.Orders contains the following columns.

You need to write a T-SQL query that will return the following columns.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have a Fabric tenant that contains a warehouse.

Several times a day, the performance of all warehouse queries degrades. You suspect that Fabric is throttling the compute used by the warehouse.

What should you use to identify whether throttling is occurring?

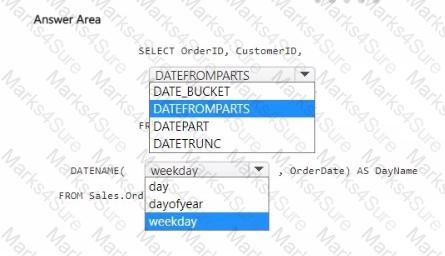

You have a data warehouse that contains a table named Stage.Customers. Stage.Customers contains all the customer record updates from a customer relationship management (CRM) system. There can be multiple updates per customer.

You need to write a T-SQL query that will return the customer ID, name, postal code, and the last updated time of the most recent row for each customer ID.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

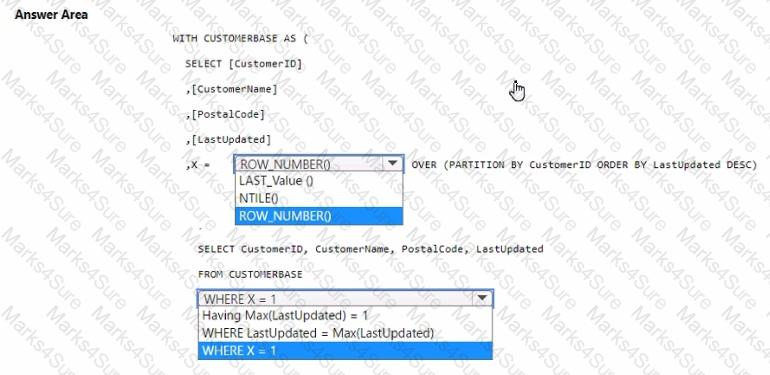
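For orientation only, the result this question asks for (one row per customer ID, taken from that customer's most recent update) can be sketched in plain Python. This is not the T-SQL from the answer area. The column names and sample rows are hypothetical, since the table schema is not shown here. The logic is equivalent to ordering each customer's rows by last updated time, newest first, and keeping only the first row.

```python
from datetime import datetime

# Hypothetical sample rows standing in for Stage.Customers.
# The column names are assumptions; the real schema is not given in the text.
rows = [
    {"customer_id": 1, "name": "Ana", "postal_code": "10001",
     "last_updated": datetime(2024, 1, 5)},
    {"customer_id": 1, "name": "Ana", "postal_code": "10002",
     "last_updated": datetime(2024, 3, 1)},
    {"customer_id": 2, "name": "Ben", "postal_code": "98052",
     "last_updated": datetime(2024, 2, 10)},
]


def latest_per_customer(rows):
    """Keep, for each customer_id, the row with the greatest last_updated."""
    latest = {}
    for row in rows:
        current = latest.get(row["customer_id"])
        if current is None or row["last_updated"] > current["last_updated"]:
            latest[row["customer_id"]] = row
    # Sort by customer_id so the output order is deterministic.
    return sorted(latest.values(), key=lambda r: r["customer_id"])


result = latest_per_customer(rows)
for row in result:
    print(row["customer_id"], row["name"], row["postal_code"], row["last_updated"])
```

With the sample data, customer 1 keeps the row with postal code 10002 because it carries the later timestamp, and customer 2 keeps its only row.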

You are analyzing customer purchases in a Fabric notebook by using PySpark. You have the following DataFrames:

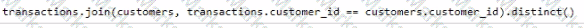

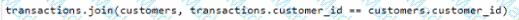

You need to join the DataFrames on the customer_id column. The solution must minimize data shuffling. You write the following code.

Which code should you run to populate the results DataFrame?

A)

B)

C)

D)

You have a Fabric tenant that contains a warehouse named DW1 and a lakehouse named LH1. DW1 contains a table named Sales.Product. LH1 contains a table named Sales.Orders.

You plan to schedule an automated process that will create a new point-in-time (PIT) table named Sales.ProductOrder in DW1. Sales.ProductOrder will be built by using the results of a query that will join Sales.Product and Sales.Orders.

You need to ensure that the types of columns in Sales.ProductOrder match the column types in the source tables. The solution must minimize the number of operations required to create the new table.

Which operation should you use?