An administrator has been asked to confirm the ability of a physical Windows Server 2019 host to boot from storage on a Nutanix AOS cluster.

Which statement is true regarding this confirmation by the administrator?

Physical servers may boot from an object bucket from the data services IP and MPIO is required.

Physical servers may boot from a volume group from the data services IP and MPIO is not required.

Physical servers may boot from a volume group from the data services IP and MPIO is required.

Physical servers may boot from an object bucket from the data services IP address and MPIO is not required.

Nutanix Volumes allows physical servers to boot from a volume group that is exposed as an iSCSI target from the data services IP. To ensure high availability and load balancing, multipath I/O (MPIO) is required on the physical server. Object buckets cannot be used for booting physical servers. References: Nutanix Volumes Administration Guide

An administrator is upgrading Files from version 3.7 to 4.1 in a highly secured environment. The pre-upgrade check fails with the following error:

"FileServer preupgrade check failed with cause(s) Sub task poll timed out"

What initial troubleshooting step should the administrator take?

Increase upgrades timeout from ecli.

Check there is enough disk space on FSVMs.

Examine the failed tasks on the FSVMs.

Verify connectivity between the FSVMs.

Nutanix Files, part of Nutanix Unified Storage (NUS), requires pre-upgrade checks to ensure a successful upgrade (e.g., from version 3.7 to 4.1). The error “Sub task poll timed out” indicates that a subtask during the pre-upgrade check did not complete within the expected time, likely due to communication or resource issues among the File Server Virtual Machines (FSVMs).

Analysis of Options:

Option A (Increase upgrades timeout from ecli): Incorrect. ecli is the CVM command-line tool for viewing Ergon tasks (for example, ecli task.list), not a tool for tuning upgrade timeouts. Raising a timeout before identifying why the subtask stalled would only mask the underlying problem, so it is not an appropriate initial troubleshooting step.

Option B (Check there is enough disk space on FSVMs): Incorrect. While insufficient disk space on FSVMs can cause upgrade issues (e.g., during the upgrade process itself), the “Sub task poll timed out” error during pre-upgrade checks is more likely related to communication or task execution issues between FSVMs, not disk space. Disk space checks are typically part of the pre-upgrade validation, and a separate error would be logged if space was the issue.

Option C (Examine the failed tasks on the FSVMs): Incorrect. Examining failed tasks on the FSVMs (e.g., by checking logs) is a valid troubleshooting step, but it is not the initial step. The “Sub task poll timed out” error suggests a communication issue, so verifying connectivity should come first. Once connectivity is confirmed, examining logs for specific task failures would be a logical next step.

Option D (Verify connectivity between the FSVMs): Correct. The “Sub task poll timed out” error indicates that the pre-upgrade check could not complete a subtask, likely because FSVMs were unable to communicate with each other or with the cluster. Nutanix Files upgrades require FSVMs to coordinate tasks, and this coordination depends on network connectivity (e.g., over the Storage and Client networks). Verifying connectivity between FSVMs (e.g., checking network status, VLAN configuration, or firewall rules in a highly secured environment) is the initial troubleshooting step to identify and resolve the root cause of the timeout.

Why Option D?

In a highly secured environment, network restrictions (e.g., firewalls, VLAN misconfigurations) are common causes of communication issues between FSVMs. The “Sub task poll timed out” error suggests that the pre-upgrade check failed because a task could not complete, likely due to FSVMs being unable to communicate. Verifying connectivity between FSVMs is the first step to diagnose and resolve this issue, ensuring that subsequent pre-upgrade checks can proceed.
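A basic reachability test can be scripted before digging into task logs. The sketch below is a minimal, hypothetical helper (check_tcp_reachability is not a Nutanix tool): it attempts a TCP connection to each FSVM IP on each port and records which attempts succeed. The FSVM addresses and the choice of port in the usage example are placeholders; consult the Nutanix port reference for the ports Files actually requires, and run the check from a host that sits on the same networks as the FSVMs so the relevant firewall rules are exercised.

```python
import socket
from typing import Dict, Iterable, List, Tuple


def check_tcp_reachability(
    hosts: Iterable[str], ports: Iterable[int], timeout: float = 3.0
) -> Dict[Tuple[str, int], bool]:
    """Try a TCP connection to every (host, port) pair.

    Returns a mapping of (host, port) -> True if the connection was accepted
    within `timeout` seconds, False otherwise (refused, filtered, or unreachable).
    """
    port_list: List[int] = list(ports)  # accept any iterable, reuse it for each host
    results: Dict[Tuple[str, int], bool] = {}
    for host in hosts:
        for port in port_list:
            try:
                # create_connection resolves the address and applies the timeout
                with socket.create_connection((host, port), timeout=timeout):
                    results[(host, port)] = True
            except OSError:
                # ConnectionRefusedError, socket.timeout, and routing errors
                # are all subclasses of OSError
                results[(host, port)] = False
    return results
```

For example, check_tcp_reachability(["10.0.0.11", "10.0.0.12", "10.0.0.13"], [22]) (hypothetical FSVM IPs, SSH port as a stand-in) returns a dictionary in which any False entry points to a blocked or unreachable path worth investigating first.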

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“If the pre-upgrade check fails with a ‘Sub task poll timed out’ error, this typically indicates a communication issue between FSVMs. As an initial troubleshooting step, verify connectivity between the FSVMs, ensuring that the Storage and Client networks are properly configured and that there are no network restrictions (e.g., firewalls) preventing communication.”

A Files administrator needs to generate a report listing the files matching those in the exhibit.

What is the most efficient way to complete this task?

Use Report Builder in File Analytics.

Create a custom report in Prism Central.

Use Report Builder in Files Console.

Create a custom report in Files Console.

The most efficient way to generate a report listing the files matching those in the exhibit is to use Report Builder in File Analytics. Report Builder is a feature that allows administrators to create custom reports based on various filters and criteria, such as file name, file type, file size, file owner, file age, file access time, file modification time, file permission change time, and so on. Report Builder can also export the reports in CSV format for further analysis or sharing. References: Nutanix Files Administration Guide, page 97; Nutanix File Analytics User Guide
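Conceptually, a Report Builder report is a set of filters applied to file metadata, followed by a CSV export. The sketch below illustrates that idea on an in-memory list; FileRecord, build_report, and the chosen fields are hypothetical and do not represent File Analytics internals or its API.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass
class FileRecord:
    """Hypothetical subset of the file metadata a report can filter on."""
    path: str
    owner: str
    size_bytes: int
    modified: datetime


def build_report(
    records: Iterable[FileRecord],
    extension: Optional[str] = None,
    owner: Optional[str] = None,
    min_size_bytes: int = 0,
) -> str:
    """Filter records on the given criteria and return the matches as CSV text."""
    matches = [
        r
        for r in records
        if (extension is None or r.path.lower().endswith(extension.lower()))
        and (owner is None or r.owner == owner)
        and r.size_bytes >= min_size_bytes
    ]
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["path", "owner", "size_bytes", "modified"])  # header row
    for r in matches:
        writer.writerow([r.path, r.owner, r.size_bytes, r.modified.isoformat()])
    return buf.getvalue()
```

Each keyword argument plays the role of one report filter; leaving it as None (or 0 for the size) means "do not filter on this field".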

An administrator needs to allow individual users to restore files and folders hosted in Files.

How can the administrator meet this requirement?

Configure a Protection Domain for the shares/exports.

Configure a Protection Domain on the FSVMs.

Enable Self-Service Restore on shares/exports.

Enable Self-Service Restore on the FSVMs.

Self-Service Restore (SSR) is a feature that allows individual users to restore files and folders hosted in Files without requiring administrator intervention. SSR is enabled on a per-share or per-export basis rather than on the FSVMs. SMB users restore from snapshots through the Windows Previous Versions tab, and NFS users through the .snapshot directory of the export. References: Nutanix Files Administration Guide
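For NFS exports, a self-service restore amounts to copying a file back out of a read-only snapshot directory. The sketch below assumes snapshots appear under a .snapshot directory at the root of a mounted export; the mount point, the snapshot name, and restore_from_snapshot itself are hypothetical illustrations rather than a Nutanix tool.

```python
import shutil
from pathlib import Path


def restore_from_snapshot(
    export_root: Path, snapshot_name: str, relative_path: str, destination: Path
) -> Path:
    """Copy one file out of a snapshot directory back into the live export.

    Assumes snapshots appear as <export_root>/.snapshot/<snapshot_name>/...
    (hypothetical layout for illustration).
    """
    source = export_root / ".snapshot" / snapshot_name / relative_path
    if not source.is_file():
        raise FileNotFoundError(f"{source} not found in snapshot {snapshot_name!r}")
    destination.parent.mkdir(parents=True, exist_ok=True)
    # copy2 also copies timestamps and permission bits where the filesystem allows
    shutil.copy2(source, destination)
    return destination
```

Writing to a new destination name (for example report.restored.txt) instead of overwriting the live file lets the user compare versions before replacing anything.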

Before upgrading Files or creating a file server, which component must first be upgraded to a compatible version?

FSM

File Analytics

Prism Central

FSVM

The component that must first be upgraded to a compatible version before upgrading Files or creating a file server is Prism Central. Prism Central is a web-based interface that allows administrators to manage multiple Nutanix clusters and services, including Files. If Prism Central is not at a version compatible with the target Files version, upgrading an existing file server or creating a new one may fail or cause unexpected errors. References: Nutanix Files Administration Guide, page 21; Nutanix Files Upgrade Guide

Which two audit trails can be monitored within Data Lens? (Choose two.)

User Emails

Client IPs

Files

Anomalies

Nutanix Data Lens, a service integrated with Nutanix Unified Storage (NUS), provides data governance and security features for Nutanix Files. One of its key features is Audit Trails, which tracks user activities and file operations. The audit trails include specific details that can be monitored and reported.

Analysis of Options:

Option A (User Emails): Incorrect. Data Lens Audit Trails track user activities, but they do not specifically log user emails as an audit trail metric. User identities (e.g., usernames) are logged, but email addresses are not a standard audit trail field.

Option B (Client IPs): Correct. Data Lens Audit Trails include the client IP addresses from which file operations are performed, allowing administrators to track the source of user actions (e.g., which IP accessed or modified a file).

Option C (Files): Correct. Data Lens Audit Trails track file-level operations, such as access, modifications, deletions, and permission changes, making “Files” a key audit trail metric.

Option D (Anomalies): Incorrect. While Data Lens does detect anomalies (e.g., ransomware activity, unusual file operations), anomalies are a separate feature, not an audit trail. Audit Trails focus on logging specific user and file activities, while anomalies are derived from analyzing those activities.

Selected Audit Trails:

B: Client IPs are logged in audit trails to identify the source of file operations.

C: Files are the primary entities tracked in audit trails, with details on operations performed on them.
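To make the two selected dimensions concrete, the sketch below models audit events as records that carry both the file path and the client IP, then answers a typical audit question: which client IPs touched a given file, and how often. AuditEvent and its fields are a hypothetical schema, not the Data Lens export format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical audit record: who did what, to which file, from where."""
    user: str
    client_ip: str
    file_path: str
    operation: str  # e.g. "read", "write", "delete", "permission_change"


def events_for_file(events: Iterable[AuditEvent], file_path: str) -> List[AuditEvent]:
    """All events recorded against one file."""
    return [e for e in events if e.file_path == file_path]


def operations_by_client_ip(events: Iterable[AuditEvent], file_path: str) -> Counter:
    """Count operations on one file, grouped by the client IP that issued them."""
    return Counter(e.client_ip for e in events_for_file(events, file_path))
```

Grouping by client IP rather than by user is what lets an administrator spot, for instance, one workstation generating an unusual share of writes to a sensitive file.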

Exact Extract from Nutanix Documentation:

From the Nutanix Data Lens Administration Guide (available on the Nutanix Portal):

“Data Lens Audit Trails provide detailed logging of file operations, including the files affected, the user performing the action, and the client IP address from which the operation was initiated. This allows administrators to monitor user activities and track access patterns.”

What is the result of an administrator applying the lifecycle policy "Expire current objects after # days/months/years" to an object with versioning enabled?

The policy deletes any past versions of the object after the specified time and does not delete any current version of the object.

The policy deletes the current version of the object after the specified time and does not delete any past versions of the object.

The policy does not delete the current version of the object after the specified time and does not delete any past versions of the object.

The policy deletes any past versions of the object after the specified time and deletes any current version of the object.

Nutanix Objects, part of Nutanix Unified Storage (NUS), supports lifecycle policies to manage the retention and expiration of objects in a bucket. When versioning is enabled, a bucket can store multiple versions of an object, with the “current version” being the latest version and “past versions” being older iterations. The lifecycle policy “Expire current objects after # days/months/years” specifically targets the current version of an object.

Analysis of Options:

Option A (The policy deletes any past versions of the object after the specified time and does not delete any current version of the object): Incorrect. The “Expire current objects” policy targets the current version, not past versions. A separate lifecycle rule (e.g., “Expire non-current versions”) would be needed to delete past versions.

Option B (The policy deletes the current version of the object after the specified time and does not delete any past versions of the object): Correct. The “Expire current objects” policy deletes the current version of an object after the specified time period (e.g., # days/months/years). Since versioning is enabled, past versions are not affected by this policy and remain in the bucket unless a separate rule targets them.

Option C (The policy does not delete the current version of the object after the specified time and does not delete any past versions of the object): Incorrect. The policy explicitly states that it expires (deletes) the current version after the specified time, so this option contradicts the policy’s purpose.

Option D (The policy deletes any past versions of the object after the specified time and deletes any current version of the object): Incorrect. The “Expire current objects” policy does not target past versions—it only deletes the current version after the specified time.

Why Option B?

When versioning is enabled, the lifecycle policy “Expire current objects after # days/months/years” applies only to the current version of the object. After the specified time, the current version expires. In the AWS S3 lifecycle model, which S3-compatible stores generally follow, expiring the current version of a versioned object adds a delete marker, so the expired version becomes a non-current version rather than being permanently erased. Past versions are not deleted unless a separate lifecycle rule (e.g., for non-current versions) is applied.
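The scoping difference between the two rule types can be expressed as a small selection function. The sketch below is a simplified, hypothetical model (ObjectVersion and versions_targeted are not part of the Objects API): it only reports which versions each rule would act on, measures every version's age from when it was written, and does not model what replaces an expired current version.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ObjectVersion:
    version_id: str
    age_days: int     # simplification: days since this version was written
    is_current: bool  # True for the latest version of the object


def versions_targeted(
    versions: List[ObjectVersion],
    expire_current_after_days: Optional[int] = None,
    expire_noncurrent_after_days: Optional[int] = None,
) -> List[str]:
    """Return the version IDs that the configured rules would expire."""
    targeted: List[str] = []
    for v in versions:
        if v.is_current:
            # "Expire current objects" only ever looks at the current version
            if expire_current_after_days is not None and v.age_days >= expire_current_after_days:
                targeted.append(v.version_id)
        else:
            # past versions are only selected by a separate non-current rule
            if expire_noncurrent_after_days is not None and v.age_days >= expire_noncurrent_after_days:
                targeted.append(v.version_id)
    return targeted
```

With only the current-version rule configured, past versions are never selected, no matter how old they are, which is the behavior described in option B.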

Exact Extract from Nutanix Documentation:

From the Nutanix Objects Administration Guide (available on the Nutanix Portal):

“When versioning is enabled on a bucket, the lifecycle policy ‘Expire current objects after # days/months/years’ deletes the current version of an object after the specified time period. Past versions of the object are not affected by this policy and will remain in the bucket unless a separate lifecycle rule is applied to expire non-current versions.”

An administrator has performed an AOS upgrade, but noticed that the compression on containers is not happening. What is the delay before compression begins on the Files container?

30 minutes

60 minutes

12 hours

24 hours

Nutanix Files, part of Nutanix Unified Storage (NUS), stores its data in containers managed by the Nutanix Acropolis Operating System (AOS). AOS supports data compression to optimize storage usage, which can be applied to Files containers. After an AOS upgrade, compression settings may take effect after a delay, as the system needs to stabilize and apply the new configuration.

Analysis of Options:

Option A (30 minutes): Incorrect. A 30-minute delay is too short for AOS to stabilize and initiate compression after an upgrade. Compression is a background process that typically requires a longer delay to ensure system stability.

Option B (60 minutes): Correct. According to Nutanix documentation, after an AOS upgrade, there is a default delay of 60 minutes before compression begins on containers, including those used by Nutanix Files. This delay allows the system to complete post-upgrade tasks (e.g., metadata updates, cluster stabilization) before initiating resource-intensive operations like compression.

Option C (12 hours): Incorrect. A 12-hour delay is excessive for compression to start. While some AOS processes (e.g., data deduplication) may have longer delays, compression typically begins sooner to optimize storage usage.

Option D (24 hours): Incorrect. A 24-hour delay is also too long for compression to start. Nutanix aims to apply compression relatively quickly after the system stabilizes, and 60 minutes is the documented delay for this process.

Why Option B?

After an AOS upgrade, compression on containers (including Files containers) is delayed by 60 minutes to allow the cluster to stabilize and complete post-upgrade tasks. This ensures that compression does not interfere with critical operations immediately following the upgrade, balancing system performance and storage optimization.
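The delay behaves like a simple time gate: compression is not attempted until the configured interval has elapsed. The sketch below uses the 60-minute value from the explanation above as its default; earliest_compression_start and compression_allowed are hypothetical helpers, not AOS functions, and actual timing in AOS may also depend on when background tasks run.

```python
from datetime import datetime, timedelta

# Default taken from the explanation above; treat it as an assumption.
COMPRESSION_DELAY = timedelta(minutes=60)


def earliest_compression_start(reference: datetime, delay: timedelta = COMPRESSION_DELAY) -> datetime:
    """First moment compression may begin, counted from the reference event."""
    return reference + delay


def compression_allowed(reference: datetime, now: datetime, delay: timedelta = COMPRESSION_DELAY) -> bool:
    """True once at least `delay` has elapsed since the reference event."""
    return now - reference >= delay
```

For an upgrade that finished at 21:56:10, earliest_compression_start returns 22:56:10 on the same day, so an administrator checking at 22:30 should not yet expect compression activity.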

Exact Extract from Nutanix Documentation:

From the Nutanix AOS Administration Guide (available on the Nutanix Portal):

“After an AOS upgrade, compression on containers, including those used by Nutanix Files, is delayed by 60 minutes. This delay allows the cluster to stabilize and complete post-upgrade tasks before initiating compression, ensuring system reliability.”

An administrator needs to add a signature to the ransomware block list. How should the administrator complete this task?

Open a support ticket to have the new signature added. Nutanix support will provide an updated Block List file.

Add the file signature to the Blocked Files Type in the Files Console.

Search the Block List for the file signature to be added, click Add to Block List when the signature is not found in File Analytics.

Download the Block List CSV file, add the new signature, then upload the CSV.

Nutanix Files, part of Nutanix Unified Storage (NUS), can protect against ransomware using integrated tools like File Analytics and Data Lens, or through integration with third-party solutions. In Question 56, we established that a third-party solution is best for signature-based ransomware prevention with a large list of malicious file signatures (300+). The administrator now needs to add a new signature to the ransomware block list, which refers to the list of malicious file signatures used for blocking.

Analysis of Options:

Option A (Open a support ticket to have the new signature added. Nutanix support will provide an updated Block List file): Correct. Nutanix Files does not natively manage a signature-based ransomware block list within its own tools (e.g., File Analytics, Data Lens), as these focus on behavioral detection (as noted in Question 56). For signature-based blocking, Nutanix integrates with third-party solutions, and the block list (signature database) is typically managed by Nutanix or the third-party provider. To add a new signature, the administrator must open a support ticket with Nutanix, who will coordinate with the third-party provider (if applicable) to update the Block List file and provide it to the customer.

Option B (Add the file signature to the Blocked Files Type in the Files Console): Incorrect. The “Blocked Files Type” in the Files Console allows administrators to blacklist specific file extensions (e.g., .exe, .bat) to prevent them from being stored on shares. This is not a ransomware block list based on signatures—it’s a simple extension-based blacklist, and file signatures (e.g., hashes or patterns used for ransomware detection) cannot be added this way.

Option C (Search the Block List for the file signature to be added, click Add to Block List when the signature is not found in File Analytics): Incorrect. File Analytics provides ransomware detection through behavioral analysis (e.g., anomaly detection, as in Question 7), not signature-based blocking. There is no “Block List” in File Analytics for managing ransomware signatures, and it does not have an “Add to Block List” option for signatures.

Option D (Download the Block List CSV file, add the new signature, then upload the CSV): Incorrect. Nutanix Files does not provide a user-editable Block List CSV file for ransomware signatures. The block list for signature-based blocking is managed by Nutanix or a third-party integration, and updates are handled through support (option A), not by manually editing a CSV file.

Why Option A?

Signature-based ransomware prevention in Nutanix Files relies on third-party integrations, as established in Question 56. The block list of malicious file signatures is not user-editable within Nutanix tools like the Files Console or File Analytics. To add a new signature, the administrator must open a support ticket with Nutanix, who will provide an updated Block List file, ensuring the new signature is properly integrated with the third-party solution.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“For signature-based ransomware prevention, Nutanix Files integrates with third-party solutions that maintain a block list of malicious file signatures. To add a new signature to the block list, open a support ticket with Nutanix. Support will coordinate with the third-party provider (if applicable) and provide an updated Block List file to include the new signature.”

What is a prerequisite for deploying Smart DR?

Open TCP port 7515 on all client network IPs uni-directionally on the source and recovery file servers.

The primary and recovery file servers must have the same domain name.

Requires one-to-many shares.

The Files Manager must have at least three file servers.

Smart DR in Nutanix Files, part of Nutanix Unified Storage (NUS), simplifies disaster recovery (DR) by automating replication policies between file servers (e.g., using NearSync, as seen in Question 24). Deploying Smart DR has specific prerequisites to ensure compatibility and successful replication between the primary and recovery file servers.

Analysis of Options:

Option A (Open TCP port 7515 on all client network IPs uni-directionally on the source and recovery file servers): Incorrect. Port 7515 is not a standard port for Nutanix Files or Smart DR communication. Smart DR replication typically uses ports like 2009 and 2020 for data transfer between FSVMs, and port 9440 for communication with Prism Central (as noted in Question 45). The client network IPs (used for SMB/NFS traffic) are not involved in Smart DR replication traffic, and uni-directional port opening is not a requirement.

Option B (The primary and recovery file servers must have the same domain name): Correct. Smart DR requires that the primary and recovery file servers are joined to the same Active Directory (AD) domain (i.e., same domain name) to ensure consistent user authentication and permissions during failover. This is a critical prerequisite, as mismatched domains can cause access issues when the recovery site takes over, especially for SMB shares relying on AD authentication.

Option C (Requires one-to-many shares): Incorrect. Smart DR does not require one-to-many shares (i.e., a single share replicated to multiple recovery sites). Nutanix Files supports one-to-one replication for shares (e.g., primary to recovery site, as seen in the exhibit for Question 24), and one-to-many replication is not a prerequisite for deploying Smart DR.

Option D (The Files Manager must have at least three file servers): Incorrect. Files Manager is the Prism Central service used to manage file servers, including Smart DR policies, and it has no requirement to manage at least three file servers. Smart DR can be deployed with a single file server on each site (primary and recovery). The recommendation of three FSVMs per file server for high availability applies to FSVMs, not to file servers, so this option misinterprets that requirement.

Why Option B?

Smart DR ensures seamless failover between primary and recovery file servers, which requires consistent user authentication. Both file servers must be joined to the same AD domain (same domain name) to maintain user permissions and access during failover, especially for SMB shares. This is a documented prerequisite for Smart DR deployment to avoid authentication issues.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“A prerequisite for deploying Smart DR is that the primary and recovery file servers must be joined to the same Active Directory domain (same domain name). This ensures consistent user authentication and permissions during failover, preventing access issues for clients.”

What is a mandatory criterion for configuring Smart Tier?

VPC name

Target URL over HTTP

Certificate

Access and secret keys

Smart Tiering in Nutanix Files, part of Nutanix Unified Storage (NUS), allows infrequently accessed (Cold) data to be tiered to external storage, such as a public cloud (e.g., AWS S3, Azure Blob), to free up space on the primary cluster (as noted in Question 34). Configuring Smart Tiering requires setting up a connection to the external storage target, which involves providing credentials and connectivity details.

Smart Tiering requires a connection to an external storage target, such as a cloud provider. The access key and secret key are mandatory to authenticate Nutanix Files with the target (e.g., an S3 bucket), enabling secure data tiering. Without these credentials, the tiering configuration cannot be completed, making them a mandatory criterion.
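A pre-flight check on the tiering target settings makes the point explicit: without both keys there is nothing to authenticate with. The sketch below is hypothetical (TieringTarget, missing_settings, and validate are not a Nutanix API), and treating the endpoint and bucket name as required alongside the access and secret keys is an assumption for illustration.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class TieringTarget:
    """Hypothetical Smart Tiering target settings for an S3-compatible store."""
    endpoint: str
    bucket: str
    access_key: str
    secret_key: str


def missing_settings(target: TieringTarget) -> List[str]:
    """Names of settings that are empty or whitespace-only, in declaration order."""
    return [f.name for f in fields(target) if not getattr(target, f.name).strip()]


def validate(target: TieringTarget) -> None:
    """Raise before any connection attempt if a required setting is blank."""
    missing = missing_settings(target)
    if missing:
        raise ValueError(f"cannot configure tiering target, missing: {', '.join(missing)}")
```

Failing fast on blank credentials surfaces the problem at configuration time instead of as an authentication error during the first tiering run.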

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“To configure Smart Tiering in Nutanix Files, you must provide the access key and secret key for the external storage target (e.g., AWS S3, Azure Blob). These credentials are mandatory to authenticate with the cloud provider and enable data tiering to the specified target.”

An administrator has received an alert A130358 - ConsistencyGroupWithStaleEntities with the following details:

Block Serial Number: leswxxxxxxxx

Alert Time: Thu Jan 19 2023 21:56:10 GMT-0800 (PST)

Alert Type: ConsistencyGroupWithStaleEntities

Alert Message: A130358:ConsistencyGroupWithStaleEntities

Cluster ID: xxxxx

Alert Body: No alert body available

Which scenario is causing the alert and needs to be addressed to allow the entities to be protected?

One or more VMs or Volume Groups belonging to the Consistency Group contains stale metadata.

One or more VMs or Volume Groups belonging to the Consistency Group may have been deleted.

The logical timestamp for one or more of the Volume Groups is not consistent between clusters.

One or more VMs or Volume Groups belonging to the Consistency Group is part of multiple Recovery Plans configured with a Witness.

The alert A130358 - ConsistencyGroupWithStaleEntities in a Nutanix environment indicates an issue with a Consistency Group, which is used in Nutanix data protection to ensure that related entities (e.g., VMs, Volume Groups) are protected together in a consistent state. This alert specifically points to “stale entities,” meaning there is a problem with the entities within the Consistency Group that prevents proper protection.

Analysis of Options:

Option A (One or more VMs or Volume Groups belonging to the Consistency Group contains stale metadata): Correct. The “ConsistencyGroupWithStaleEntities” alert is triggered when entities (e.g., VMs or Volume Groups) in a Consistency Group have stale metadata, meaning their metadata is outdated or corrupted. This can happen due to synchronization issues, failed operations, or manual changes that leave the metadata inconsistent with the actual state of the entity. This prevents the Consistency Group from being protected properly, as the system cannot ensure consistency.

Option B (One or more VMs or Volume Groups belonging to the Consistency Group may have been deleted): Incorrect. If an entity in a Consistency Group is deleted, a different alert would typically be triggered (e.g., related to a missing entity). The “StaleEntities” alert specifically refers to metadata issues, not deletion. However, deletion could indirectly cause metadata staleness if the deletion was not properly synchronized, but this is not the primary cause described by the alert.

Option C (The logical timestamp for one or more of the Volume Groups is not consistent between clusters): Incorrect. Inconsistent logical timestamps between clusters would typically trigger a different alert related to replication or synchronization (e.g., in Metro Availability or NearSync scenarios). The “StaleEntities” alert is specific to metadata issues within the Consistency Group on the local cluster, not a cross-cluster timestamp issue.

Option D (One or more VMs or Volume Groups belonging to the Consistency Group is part of multiple Recovery Plans configured with a Witness): Incorrect. Being part of multiple Recovery Plans or using a Witness (e.g., in Metro Availability) does not directly cause a “StaleEntities” alert. This scenario might cause other issues (e.g., conflicts in recovery operations), but it is not related to stale metadata within a Consistency Group.

Why Option A?

The “ConsistencyGroupWithStaleEntities” alert explicitly indicates that the entities in the Consistency Group have stale metadata, which must be resolved to allow proper protection. The administrator would need to investigate the affected VMs or Volume Groups, clear the stale metadata (e.g., by refreshing the Consistency Group or removing/re-adding the entity), and ensure synchronization with the cluster’s state.

Exact Extract from Nutanix Documentation:

From the Nutanix Prism Alerts Reference Guide (available on the Nutanix Portal):

“Alert A130358 - ConsistencyGroupWithStaleEntities: This alert is triggered when one or more entities (e.g., VMs or Volume Groups) in a Consistency Group have stale metadata, preventing the group from being protected consistently. Stale metadata can occur due to failed operations, synchronization issues, or manual changes. To resolve, identify the affected entities, clear the stale metadata, and ensure the Consistency Group is properly synchronized.”

Which configuration is required for an Objects deployment?

Configure Domain Controllers on both Prism Element and Prism Central.

Configure VPC on both Prism Element and Prism Central.

Configure a dedicated storage container on Prism Element or Prism Central.

Configure NTP servers on both Prism Element and Prism Central.

The configuration that is required for an Objects deployment is NTP servers on both Prism Element and Prism Central. NTP (Network Time Protocol) synchronizes the clocks of devices on a network with a reliable time source. Configuring NTP servers on both Prism Element and Prism Central keeps time settings consistent and accurate across the Nutanix cluster and the Objects cluster, which prevents synchronization issues and errors. References: Nutanix Objects User Guide, page 9; Nutanix Objects Deployment Guide
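The quantity NTP corrects is the clock offset, which a client estimates from four timestamps per exchange (client transmit t1, server receive t2, server transmit t3, client receive t4) using the standard formulas offset = ((t2 - t1) + (t3 - t4)) / 2 and round-trip delay = (t4 - t1) - (t3 - t2). The sketch below implements those formulas plus a hypothetical tolerance check that compares two systems, such as Prism Element and Prism Central, measured against the same NTP source; the tolerance value is an assumption, not a Nutanix requirement.

```python
from typing import Tuple


def ntp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Clock offset and round-trip delay from one NTP exchange (seconds).

    t1: client transmit, t2: server receive, t3: server transmit, t4: client receive.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


def clocks_agree(offset_a: float, offset_b: float, tolerance_s: float) -> bool:
    """Two systems measured against the same source agree if their offsets differ by at most tolerance_s."""
    return abs(offset_a - offset_b) <= tolerance_s
```

For t1 = 0, t2 = 5, t3 = 6, t4 = 3 the client clock is 4 seconds behind the server and the network round trip took 2 seconds.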

Users are complaining about having to reconnect to shares when there are networking issues.

Which Files feature should the administrator enable to ensure that sessions auto-reconnect in such events?

Durable File Handles

Multi-Protocol Shares

Connected Shares

Workload Optimization

The Files feature that the administrator should enable to ensure the sessions will auto-reconnect in such events is Durable File Handles. Durable File Handles is a feature that allows SMB clients to reconnect to a file server after a temporary network disruption or a client sleep state without losing the handle to the open file. Durable File Handles can improve the user experience and reduce the risk of data loss or corruption. Durable File Handles can be enabled for each share in the Files Console. References: Nutanix Files Administration Guide, page 76; Nutanix Files Solution Guide, page 10

A company is currently using Objects 3.2 with a single Object Store and a single S3 bucket that was created as a repository for their data protection (backup) application. In the near future, additional S3 buckets will be created as this was requested by their DevOps team. After facing several issues when writing backup images to the S3 bucket, the vendor of the data protection solution found the issue to be a compatibility issue with the S3 protocol. The proposed solution is to use an NFS repository instead of the S3 bucket as backup is a critical service, and this issue was unknown to the backup software vendor with no foreseeable date to solve this compatibility issue. What is the fastest solution that requires the least consumption of compute capacity (CPU and memory) of their Nutanix infrastructure?

Delete the existing bucket, create a new bucket, and enable NFS v3 access.

Deploy Files and create a new Share with multi-protocol access enabled.

Redeploy Objects using the latest version, create a new bucket, and enable NFS v3 access.

Upgrade Objects to the latest version, create a new bucket, and enable NFS v3 access.

The company is using Nutanix Objects 3.2, a component of Nutanix Unified Storage (NUS), which provides S3-compatible object storage. Due to an S3 protocol compatibility issue with their backup application, they need to switch to an NFS repository. The solution must be the fastest and consume the least compute capacity (CPU and memory) on their Nutanix infrastructure.

Analysis of Options:

Option A (Delete the existing bucket, create a new bucket, and enable NFS v3 access): Incorrect. Nutanix Objects does support NFS access for buckets starting with version 3.5 (as per Nutanix documentation), but Objects 3.2 does not have this capability. Since the company is using Objects 3.2, this option is not feasible without upgrading or redeploying Objects, which is not mentioned in this option. Even if NFS were supported, deleting and recreating buckets does not address the compatibility issue directly and may still consume compute resources for bucket operations.

Option B (Deploy Files and create a new Share with multi-protocol access enabled): Correct. Nutanix Files, another component of NUS, supports NFS natively and can be deployed to create an NFS share quickly. Multi-protocol access (e.g., NFS and SMB) can be enabled on a Files share, allowing the backup application to use NFS as a repository. Deploying a Files instance with a minimal configuration (e.g., 3 FSVMs) consumes relatively low compute resources compared to redeploying or upgrading Objects, and it is the fastest way to provide an NFS repository without modifying the existing Objects deployment.

Option C (Redeploy Objects using the latest version, create a new bucket, and enable NFS v3 access): Incorrect. Redeploying Objects with the latest version (e.g., 4.0 or later) would allow NFS v3 access, as this feature was introduced in Objects 3.5. However, redeployment is a time-consuming process that involves uninstalling the existing Object Store, redeploying a new instance, and reconfiguring buckets. This also consumes significant compute resources during the redeployment process, making it neither the fastest nor the least resource-intensive solution.

Option D (Upgrade Objects to the latest version, create a new bucket, and enable NFS v3 access): Incorrect. Upgrading Objects from 3.2 to a version that supports NFS (e.g., 3.5 or later) is a viable solution, as it would allow enabling NFS v3 access on a new bucket. However, upgrading Objects involves downtime, validation, and potential resource overhead during the upgrade process, which does not align with the requirement for the fastest solution with minimal compute capacity usage.

Why Option B is the Fastest and Least Resource-Intensive:

Nutanix Files Deployment: Deploying a new Nutanix Files instance is a straightforward process that can be completed in minutes via Prism Central or the Files Console. A minimal Files deployment (e.g., 3 FSVMs) requires 4 vCPUs and 12 GiB of RAM per FSVM, totaling 12 vCPUs and 36 GiB of RAM. This is a relatively low resource footprint compared to redeploying or upgrading an Objects instance, which may require more compute resources during the process.
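The footprint arithmetic above can be checked with a short Python sketch. It takes the per-FSVM figures quoted in this explanation (4 vCPUs and 12 GiB of RAM) as defaults and simply multiplies them by the FSVM count; the function name `files_footprint` is hypothetical and this is not a Nutanix sizing tool.

```python
# Minimal sketch: total compute footprint of a Files instance, assuming every
# FSVM receives the same allocation. The defaults (4 vCPUs, 12 GiB RAM per FSVM)
# are the figures quoted in the explanation above, not an authoritative spec.

def files_footprint(fsvm_count: int, vcpus_per_fsvm: int = 4, ram_gib_per_fsvm: int = 12) -> tuple[int, int]:
    """Return (total_vcpus, total_ram_gib) for a Files instance."""
    if fsvm_count < 1:
        raise ValueError("a Files instance needs at least one FSVM")
    return fsvm_count * vcpus_per_fsvm, fsvm_count * ram_gib_per_fsvm


if __name__ == "__main__":
    vcpus, ram = files_footprint(3)
    print(f"{vcpus} vCPUs, {ram} GiB RAM")  # 12 vCPUs, 36 GiB RAM
```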

NFS Support: Nutanix Files natively supports NFS, and enabling multi-protocol access (NFS and SMB) on a share is a simple configuration step that does not require modifying the existing Objects deployment.

Speed: Deploying Files and creating a share can be done without downtime to the existing Objects setup, making it faster than upgrading or redeploying Objects.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Deployment Guide (available on the Nutanix Portal):

“Nutanix Files supports multi-protocol access, allowing shares to be accessed via both NFS and SMB protocols. To enable NFS access, deploy a Files instance and create a share with multi-protocol access enabled. A minimal Files deployment requires 3 FSVMs, each with 4 vCPUs and 12 GiB of RAM, ensuring efficient resource usage.”

From the Nutanix Objects Administration Guide (available on the Nutanix Portal):

“Starting with Objects 3.5, NFS v3 access is supported for buckets, allowing them to be mounted as NFS file systems. This feature is not available in earlier versions, such as Objects 3.2.”

An administrator has been tasked with creating a distributed share on a single-node cluster, but has been unable to successfully complete the task.

Why is this task failing?

File server version should be greater than 3.8.0

AOS version should be greater than 6.0.

Number of distributed shares limit reached.

Distributed shares require multiple nodes.

A distributed share is a type of SMB share or NFS export that distributes the hosting of top-level directories across multiple FSVMs, which improves load balancing and performance. A distributed share cannot be created on a single-node cluster, because there is only one FSVM available. A distributed share requires at least two nodes in the cluster to distribute the directories. Therefore, the task of creating a distributed share on a single-node cluster will fail. References: Nutanix Files Administration Guide, page 33; Nutanix Files Solution Guide, page 8
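To make the idea of distributing top-level directories concrete, here is a minimal Python sketch. The placement rule (CRC32 of the directory name modulo the FSVM count) and the names `place_top_level_dirs`, `fsvm-1`, and so on are hypothetical illustrations, not how Nutanix Files actually assigns directories; the only point carried over from the text is that distribution is impossible with a single FSVM.

```python
import zlib


def place_top_level_dirs(dirs: list[str], fsvms: list[str]) -> dict[str, str]:
    """Assign each top-level directory of a distributed share to one hosting FSVM.

    Hypothetical placement rule: CRC32 of the directory name modulo the FSVM count.
    Nutanix Files uses its own placement logic; this only illustrates the idea.
    """
    if len(fsvms) < 2:
        # One FSVM (for example, on a single-node cluster) leaves nothing to distribute across.
        raise ValueError("a distributed share needs more than one FSVM")
    return {d: fsvms[zlib.crc32(d.encode("utf-8")) % len(fsvms)] for d in dirs}


if __name__ == "__main__":
    placement = place_top_level_dirs(["alice", "bob", "carol"], ["fsvm-1", "fsvm-2", "fsvm-3"])
    for directory, fsvm in placement.items():
        print(directory, "->", fsvm)
```

A stable hash keeps the mapping deterministic, so a given directory always resolves to the same FSVM as long as the FSVM list does not change.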

A distributed share in Nutanix Files, part of Nutanix Unified Storage (NUS), is a share that spans multiple File Server Virtual Machines (FSVMs) to provide scalability and high availability. Distributed shares are designed to handle large-scale workloads by distributing file operations across FSVMs.

Analysis of Options:

Option A (File server version should be greater than 3.8.0): Incorrect. While Nutanix Files has version-specific features, distributed shares have been supported since earlier versions (e.g., Files 3.5). The failure to create a distributed share on a single-node cluster is not due to the Files version.

Option B (AOS version should be greater than 6.0): Incorrect. Nutanix AOS (Acropolis Operating System) version 6.0 or later is not a specific requirement for distributed shares. Distributed shares have been supported in earlier AOS versions (e.g., AOS 5.15 and later with compatible Files versions). The issue is related to the cluster’s node count, not the AOS version.

Option C (Number of distributed shares limit reached): Incorrect. The question does not indicate that the administrator has reached a limit on the number of distributed shares. The failure is due to the single-node cluster limitation, not a share count limit.

Option D (Distributed shares require multiple nodes): Correct. Distributed shares in Nutanix Files require a minimum of three FSVMs for high availability and load balancing, which in turn requires a cluster with at least three nodes. A single-node cluster cannot support a distributed share because it lacks the necessary nodes to host multiple FSVMs, which are required for the distributed architecture.

Why Option D?

A single-node cluster cannot support a distributed share because Nutanix Files requires at least three FSVMs for a distributed share, and each FSVM typically runs on a separate node for high availability. A single-node cluster can support a non-distributed (standard) share, but not a distributed share, which is designed for scalability across multiple nodes.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“Distributed shares in Nutanix Files require a minimum of three FSVMs to ensure scalability and high availability. This requires a cluster with at least three nodes, as each FSVM is typically hosted on a separate node. Single-node clusters do not support distributed shares due to this requirement.”

With the settings shown on the exhibit, if there were 1000 files in the repository, how many files would have to be… anomaly alert to the administrator?

1

10

100

1000

With the settings shown on the exhibit, if there were 1000 files in the repository, 10 files would have to be deleted within an hour to trigger an anomaly alert to the administrator. An anomaly alert notifies the administrator of unusual or suspicious activity on file data, such as mass deletion or encryption. Anomaly alerts can be configured with various parameters, such as threshold percentage, time window, and minimum number of files. In this case, the threshold percentage is set to 1%, which means that if 1% or more of the files in a repository are deleted within an hour, an anomaly alert is triggered. Since there are 1000 files in the repository, 1% of them is 10 files. Therefore, if 10 or more files are deleted within an hour, an anomaly alert will be sent to the administrator. References: Nutanix Files Administration Guide, page 98; Nutanix Data Lens User Guide
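The threshold arithmetic can be expressed as a small Python sketch. It assumes the alert fires when deletions within the time window reach the percentage threshold (greater than or equal, not strictly greater), which is what makes 10 the answer for 1000 files at 1%; the function names are hypothetical and do not correspond to any Nutanix API.

```python
import math


def anomaly_file_threshold(total_files: int, threshold_percent: float) -> int:
    """Smallest number of deleted files that reaches the percentage threshold."""
    return math.ceil(total_files * threshold_percent / 100)


def triggers_alert(deleted_in_window: int, total_files: int, threshold_percent: float) -> bool:
    """True if deletions within the time window reach the threshold (>=, not >)."""
    return deleted_in_window >= anomaly_file_threshold(total_files, threshold_percent)


if __name__ == "__main__":
    print(anomaly_file_threshold(1000, 1))  # 10
```

Rounding up with `math.ceil` covers repositories where the percentage does not divide evenly, for example 1% of 950 files still requires 10 deletions.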

Workload optimization for Files is based on which entity?

Protocol

File type

FSVM quantity

Block size

Workload optimization in Nutanix Files, part of Nutanix Unified Storage (NUS), refers to the process of tuning the Files deployment to handle specific workloads efficiently. This involves scaling resources to match the workload demands, and the primary entity for optimization is the number of File Server Virtual Machines (FSVMs).

Analysis of Options:

Option A (Protocol): Incorrect. While Nutanix Files supports multiple protocols (SMB, NFS), workload optimization is not directly based on the protocol. Protocols affect client access, but optimization focuses on resource allocation.

Option B (File type): Incorrect. File type (e.g., text, binary) is not a factor in workload optimization for Files. Optimization focuses on infrastructure resources, not the nature of the files.

Option C (FSVM quantity): Correct. Nutanix Files uses FSVMs to distribute file service workloads across the cluster. Workload optimization involves adjusting the number of FSVMs to handle the expected load, ensuring balanced performance and scalability. For example, adding more FSVMs can improve performance for high-concurrency workloads.

Option D (Block size): Incorrect. Block size is relevant for block storage (e.g., Nutanix Volumes), but Nutanix Files operates at the file level, not the block level. Workload optimization in Files does not involve block size adjustments.

Why FSVM Quantity?

FSVMs are the core entities that process file operations in Nutanix Files. Optimizing for a workload (e.g., high read/write throughput, many concurrent users) typically involves scaling the number of FSVMs to distribute the load, adding compute and memory resources as needed, or adjusting FSVM placement for better performance.
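The scale-out reasoning can be sketched as a simple rounding-up calculation in Python. This is a hypothetical illustration: `fsvms_needed` is not a Nutanix tool, and the per-FSVM client capacity is an input you would take from Nutanix sizing guidance; the numbers in the example are made up.

```python
import math


def fsvms_needed(concurrent_clients: int, clients_per_fsvm: int) -> int:
    """FSVMs required if each FSVM serves at most `clients_per_fsvm` concurrent clients."""
    if clients_per_fsvm <= 0:
        raise ValueError("clients_per_fsvm must be positive")
    # Round up, and never go below one FSVM.
    return max(1, math.ceil(concurrent_clients / clients_per_fsvm))


if __name__ == "__main__":
    # Made-up numbers purely for illustration.
    print(fsvms_needed(2500, 1000))  # 3
```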

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“Workload optimization in Nutanix Files is achieved by adjusting the number of FSVMs in the file server. For high-performance workloads, you can scale out by adding more FSVMs to distribute the load across the cluster, ensuring optimal resource utilization and performance.”

An existing Object bucket was created for backups with these requirements:

* WORM policy of one year

* Versioning policy of one year

* Lifecycle policy of three years

A recent audit has reported a compliance failure. Data that should be retained for three years has been deleted prematurely.

How should the administrator resolve the compliance failure within Objects?

Modify the existing bucket versioning policy from one year to three years.

Recreate a new bucket with the retention policy of three years.

Modify the existing bucket WORM policy from one year to three years.

Create a tiering policy to store deleted data on cold storage for three years.

The administrator should resolve the compliance failure within Objects by modifying the existing bucket WORM (Write-Once Read-Many) policy from one year to three years. WORM is a feature that prevents anyone from modifying or deleting data in a bucket while the policy is active. WORM policies help comply with strict data retention regulations that mandate how long specific data must be stored. The administrator can extend the WORM retention period for a bucket at any time, but cannot reduce it or delete it. By extending the WORM policy from one year to three years, the administrator can ensure that data in the bucket is retained for the required duration and not deleted prematurely. References: Nutanix Objects User Guide, page 17; Nutanix Objects Solution Guide, page 9

Nutanix Objects, part of Nutanix Unified Storage (NUS), supports several policies for data retention and management:

WORM (Write Once, Read Many): Prevents objects from being modified or deleted for a specified period.

Versioning: Retains multiple versions of an object, with a policy to expire non-current versions after a specified time.

Lifecycle Policy: Deletes objects (or versions) after a specified time (e.g., “Expire current objects after X years”).

The bucket in question has:

A WORM policy of one year (objects cannot be modified/deleted for one year).

A versioning policy of one year (non-current versions are deleted after one year).

A lifecycle policy of three years (current objects are deleted after three years).

The compliance failure indicates that data expected to be retained for three years was deleted prematurely, meaning some data was deleted before the three-year mark.

Analysis of Policies and Issue:

The lifecycle policy of three years means the current version of an object is deleted after three years, which aligns with the retention requirement.

The WORM policy of one year ensures that objects cannot be deleted or modified for one year, after which they can be deleted (unless protected by another policy).

The versioning policy of one year means that non-current versions of an object are deleted after one year. Since versioning is enabled, every time an object is updated, a new version is created, and the previous version becomes a non-current version. With a versioning policy of one year, these non-current versions are deleted after one year, which is likely causing the compliance failure—data (past versions) that should be retained for three years is being deleted after only one year.
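A simplified Python model makes this timeline concrete. It is a hypothetical illustration, not Nutanix Objects behavior: it assumes every expiry clock starts when a version is written and that an active WORM period holds a version until at least `worm_years`. Under those assumptions, the audited configuration lets non-current versions go after one year, and raising the versioning policy to three years brings both current and non-current versions to the three-year requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BucketPolicies:
    worm_years: float               # WORM: no deletion before this age
    noncurrent_expiry_years: float  # versioning policy: expire non-current versions
    current_expiry_years: float     # lifecycle policy: expire current objects


def earliest_deletion_age(p: BucketPolicies, is_current: bool) -> float:
    """Age in years (since the version was written) at which a version can first be deleted.

    Simplifying assumptions: every expiry clock starts when the version is written,
    and an active WORM period holds a version until at least `worm_years`.
    """
    expiry = p.current_expiry_years if is_current else p.noncurrent_expiry_years
    return max(expiry, p.worm_years)


def meets_retention(p: BucketPolicies, required_years: float) -> bool:
    """True only if both current and non-current versions survive `required_years`."""
    return all(earliest_deletion_age(p, current) >= required_years for current in (True, False))


if __name__ == "__main__":
    audited = BucketPolicies(worm_years=1, noncurrent_expiry_years=1, current_expiry_years=3)
    print(earliest_deletion_age(audited, is_current=False))  # 1
    print(meets_retention(audited, required_years=3))        # False
```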

Analysis of Options:

Option A (Modify the existing bucket versioning policy from one year to three years): Correct. The versioning policy determines how long non-current versions are retained. Since the compliance requirement is to retain data for three years, and the lifecycle policy already ensures the current version is kept for three years, the versioning policy should be updated to retain non-current versions for three years as well. This prevents premature deletion of past versions, resolving the compliance failure.

Option C (Modify the existing bucket WORM policy from one year to three years): Incorrect. The WORM policy prevents deletion or modification of objects for the specified period (one year). Extending it to three years would prevent manual deletion for a longer period, but it does not address the issue of non-current versions being deleted by the versioning policy after one year. The lifecycle and versioning policies are the primary mechanisms for automatic deletion, and WORM does not override them once the WORM period expires.

Option D (Create a tiering policy to store deleted data on cold storage for three years): Incorrect. Tiering policies in Nutanix Objects move data to cold storage (e.g., AWS S3, Azure Blob) for cost optimization, but they do not apply to deleted data. Once data is deleted (e.g., by the versioning policy), it cannot be tiered. This option does not address the root cause of premature deletion.

Why Option A?

The compliance failure is due to non-current versions being deleted after one year (per the versioning policy), while the requirement is to retain all data for three years. By extending the versioning policy to three years, non-current versions will be retained for the full three-year period, aligning with the lifecycle policy for the current version and resolving the compliance issue.

Exact Extract from Nutanix Documentation:

From the Nutanix Objects Administration Guide (available on the Nutanix Portal):

“When versioning is enabled, the versioning policy determines how long non-current versions of an object are retained before deletion. For example, a versioning policy of one year will delete non-current versions after one year. To meet compliance requirements, ensure that the versioning policy aligns with the desired retention period for all versions of an object, in conjunction with the lifecycle policy for current objects.”

An administrator needs to ensure maximum performance, throughput, and redundancy for the company’s Oracle RAC on Linux implementation, while using the native method for securing workloads.

Which configuration meets these requirements?

Files with a distributed share and ABE

Files with a general purpose share and File Blocking

Volumes with MPIO and a single vDisk

Volumes with CHAP and multiple vDisks

Volumes is a feature that allows users to create and manage block storage devices (volume groups) on a Nutanix cluster. Volume groups can be accessed by external hosts using the iSCSI protocol. To ensure maximum performance, throughput, and redundancy for Oracle RAC on Linux implementation, while using the native method for securing workloads, the recommended configuration is to use Volumes with MPIO (Multipath I/O) and a single vDisk (virtual disk). MPIO is a technique that allows multiple paths between an iSCSI initiator and an iSCSI target, which improves performance and availability. A single vDisk is a logical unit number (LUN) that can be assigned to multiple hosts in a volume group, which simplifies management and reduces overhead. References: Nutanix Volumes Administration Guide, page 13; Nutanix Volumes Best Practices Guide

Which tool allows a report on file sizes to be automatically generated on a weekly basis?

Data Lens

Files view in Prism Central

Files Console via Prism Element

File Analytics

Data Lens is a feature that provides insights into the data stored in Files, such as file types, sizes, owners, permissions, and access patterns. Data Lens allows administrators to create reports on various aspects of their data and schedule them to run automatically on a weekly basis. References: Nutanix Data Lens Administration Guide

What is the network requirement for a File Analytics deployment?

Must use the CVM network

Must use the Client-side network

Must use the Backplane network

Must use the Storage-side network

Nutanix File Analytics, part of Nutanix Unified Storage (NUS), is a tool for monitoring and analyzing file data within Nutanix Files deployments. It is deployed as a virtual machine (VM) on the Nutanix cluster and requires network connectivity to communicate with the File Server Virtual Machines (FSVMs) and other components.

Analysis of Options:

Option A (Must use the CVM network): Incorrect. The CVM (Controller VM) network is typically an internal network used for communication between CVMs and storage components (e.g., the Distributed Storage Fabric). File Analytics does not specifically require the CVM network; it needs to communicate with FSVMs over a network accessible to clients and management.

Option B (Must use the Client-side network): Correct. File Analytics requires connectivity to the FSVMs to collect and analyze file data. The Client-side network (also called the external network) is the network used by FSVMs for client communication (e.g., SMB, NFS) and management traffic. File Analytics must be deployed on this network to access the FSVMs, as well as to allow administrators to access its UI.

Option C (Must use the Backplane network): Incorrect. The Backplane network is an internal network used for high-speed communication between nodes in a Nutanix cluster (e.g., for data replication, cluster services). File Analytics does not use the Backplane network, as it needs to communicate externally with FSVMs and users.

Option D (Must use the Storage-side network): Incorrect. The Storage-side network is used for internal communication between FSVMs and the Nutanix cluster’s storage pool. File Analytics does not directly interact with the storage pool; it communicates with FSVMs over the Client-side network to collect analytics data.

Why Option B?

File Analytics needs to communicate with FSVMs to collect file metadata and user activity data, and it also needs to be accessible by administrators for monitoring. The Client-side network (used by FSVMs for client access and management) is the appropriate network for File Analytics deployment, as it ensures connectivity to the FSVMs and allows external access to the File Analytics UI.

Exact Extract from Nutanix Documentation:

From the Nutanix File Analytics Deployment Guide (available on the Nutanix Portal):

“File Analytics must be deployed on the Client-side network, which is the external network used by FSVMs for client communication (e.g., SMB, NFS) and management traffic. This ensures that File Analytics can communicate with the FSVMs to collect analytics data and that administrators can access the File Analytics UI.”

An administrator has created a distributed share on the Files cluster. The administrator connects to the share using Windows Explorer and starts creating folders in the share. The administrator observes that none of the created folders can be renamed as the company naming convention requires. How should the administrator resolve this issue?

Use the Microsoft Shared Folder MMC Snap-in.

Use the Files MMC Snap-in and rename the folders.

Modify the read/write permissions on the created folders.

Modify the Files shares to use the NFS protocol.

Nutanix Files, part of Nutanix Unified Storage (NUS), supports distributed shares that span multiple File Server Virtual Machines (FSVMs) for scalability. The administrator has created a distributed share, accessed it via Windows Explorer (implying the SMB protocol), and created folders. However, the folders cannot be renamed to meet the company’s naming convention, indicating a permissions issue.

Understanding the Issue:

Distributed Share: A distributed share in Nutanix Files is accessible via SMB or NFS and spans multiple FSVMs.

Windows Explorer (SMB): The administrator is using Windows Explorer, indicating the share is accessed via SMB.

Cannot Rename Folders: The inability to rename folders suggests a permissions restriction, likely because the user account used to create the folders does not have sufficient permissions to modify them (e.g., rename).

Company Naming Convention: The requirement to rename folders to meet a naming convention implies the administrator needs full control over the folders, which may not be granted by the current permissions.

Analysis of Options:

Option A (Use the Microsoft Shared Folder MMC Snap-in): Incorrect. The Microsoft Shared Folder MMC Snap-in (e.g., via Computer Management) allows management of SMB shares on a Windows server, but Nutanix Files shares are managed through the Files Console or FSVMs, not a Windows server. While this tool can view shares, it does not provide a mechanism to resolve renaming issues caused by permissions on a Nutanix Files share.

Option B (Use the Files MMC Snap-in and rename the folders): Incorrect. There is no “Files MMC Snap-in” for Nutanix Files. Nutanix Files is managed via the Files Console in Prism Central or through CLI/FSVM access. This option appears to be a misnomer and does not provide a valid solution for renaming folders.

Option C (Modify the read/write permissions on the created folders): Correct. The inability to rename folders in an SMB share is typically due to insufficient permissions. When the administrator created the folders via Windows Explorer, the default permissions (inherited from the share or parent folder) may not grant the necessary rights (e.g., “Modify” or “Full Control”) to rename them. The administrator should modify the permissions on the created folders to grant the required rights (e.g., Full Control) to the user account or group, allowing renaming to meet the company naming convention. This can be done via Windows Explorer (Properties > Security tab) or through the Files Console by adjusting share/folder permissions.

Option D (Modify the Files shares to use the NFS protocol): Incorrect. Switching the share to use NFS instead of SMB would require reconfiguring the share and client access, which is unnecessary and disruptive. The issue is with permissions, not the protocol, and SMB supports folder renaming if the correct permissions are set. Additionally, NFS may introduce other complexities (e.g., Unix permissions) that do not address the core issue.

Why Option C?

The inability to rename folders in an SMB share is a permissions issue. Modifying the read/write permissions on the created folders to grant the administrator (or relevant user/group) the necessary rights (e.g., Modify or Full Control) allows renaming, resolving the issue and enabling compliance with the company naming convention. This can be done directly in Windows Explorer or via the Files Console.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“If users cannot rename folders in an SMB share on Nutanix Files, this is typically due to insufficient permissions. Modify the read/write permissions on the affected folders to grant the necessary rights (e.g., Modify or Full Control) to the user or group. Permissions can be adjusted via Windows Explorer (Properties > Security) or through the Files Console by editing share or folder permissions.”

An administrator is having difficulty enabling Data Lens for a file server. What is the most likely cause of this issue?

The file server has been cloned.

SSR is enabled on the file server.

The file server is in a Protection Domain.

The file server has blacklisted file types.

Nutanix Data Lens, a service integrated with Nutanix Unified Storage (NUS), provides data governance, analytics, and ransomware protection for Nutanix Files. Enabling Data Lens for a file server involves configuring the service to monitor the file server’s shares. If the administrator is unable to enable Data Lens, there may be a configuration or compatibility issue with the file server.

Analysis of Options:

Option A (The file server has been cloned): Correct. Cloning a file server (e.g., creating a duplicate file server instance via cloning in Nutanix) is not a supported configuration for Data Lens. Data Lens relies on a unique file server identity to manage its metadata and analytics. If the file server has been cloned, Data Lens may fail to enable due to conflicts in identity or metadata, as the cloned file server may not be properly registered or recognized by Data Lens.

Option B (SSR is enabled on the file server): Incorrect. Self-Service Restore (SSR) is a feature in Nutanix Files that allows users to recover previous versions of files in SMB shares. Enabling SSR does not affect the ability to enable Data Lens, as the two features are independent and can coexist on a file server.

Option C (The file server is in a Protection Domain): Incorrect. A Protection Domain in Nutanix is used for disaster recovery (DR) of VMs or Volume Groups, not file servers directly. Nutanix Files uses replication policies (e.g., NearSync) for DR, not Protection Domains. Even if the file server is part of a replication setup, this does not prevent Data Lens from being enabled.

Option D (The file server has blacklisted file types): Incorrect. Blacklisting file types in Nutanix Files prevents certain file extensions from being stored on shares (e.g., for security reasons). However, this feature does not affect the ability to enable Data Lens, which operates at a higher level to analyze file metadata and user activity, regardless of file types.

Why Option A?

Cloning a file server creates a duplicate instance that may not be properly registered with Data Lens, leading to conflicts in identity, metadata, or configuration. Nutanix documentation specifies that Data Lens requires a uniquely deployed file server, and cloning can cause issues when enabling the service, making this the most likely cause of the administrator’s difficulty.

Exact Extract from Nutanix Documentation:

From the Nutanix Data Lens Administration Guide (available on the Nutanix Portal):

“Data Lens requires a uniquely deployed file server to enable its services. Cloning a file server is not supported, as it may result in conflicts with Data Lens metadata and configuration. If a file server has been cloned, Data Lens may fail to enable, and the file server must be redeployed or re-registered to resolve the issue.”

An administrator needs to scale out an existing Files instance. Based on the company’s requirements, the Files instance, which currently has four FSVMs configured, needs to expand to six.

How many additional Client IP addresses and Storage IP addresses does the administrator require to complete this task?

3 Client IPs and 2 Storage IPs

2 Client IPs and 2 Storage IPs

3 Client IPs and 3 Storage IPs

2 Client IPs and 3 Storage IPs

To scale out an existing Files instance, the administrator needs to add one Client IP and one Storage IP for each additional FSVM. Since the Files instance needs to expand from four FSVMs to six FSVMs, the administrator needs to add two Client IPs and two Storage IPs in total. The Client IPs are used for communication between the FSVMs and the clients, while the Storage IPs are used for communication between the FSVMs and the CVMs. References: Nutanix Files Administration Guide, page 28; Nutanix Files Solution Guide, page 7
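The one-IP-per-added-FSVM rule stated above reduces to a subtraction, shown here as a minimal Python sketch. The function name `additional_ips` is hypothetical; the sketch encodes only the rule from this explanation and ignores any other addressing needs (for example, spare addresses an environment might reserve).

```python
def additional_ips(current_fsvms: int, target_fsvms: int) -> dict[str, int]:
    """One Client IP and one Storage IP per added FSVM, per the rule stated above."""
    if target_fsvms < current_fsvms:
        raise ValueError("scale-out cannot reduce the FSVM count")
    added = target_fsvms - current_fsvms
    return {"client_ips": added, "storage_ips": added}


if __name__ == "__main__":
    print(additional_ips(4, 6))  # {'client_ips': 2, 'storage_ips': 2}
```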

How can an administrator deploy a new instance of Files?

From LCM in Prism Central.

From LCM in Prism Element.

From the Storage view in Prism Element.

From the Files Console view in Prism Central.

The Files Console view in Prism Central is the primary interface for deploying and managing Files clusters. Administrators can use the Files Console to create a new instance of Files by providing the required information, such as cluster name, network configuration, storage capacity, and license key. References: Nutanix Files Administration Guide

Deploying a new instance of Nutanix Files is done through the Files Console view in Prism Central, where the administrator can create a new File Server, specify the number of FSVMs, configure networks (Client and Storage), and allocate storage. This is the standard and supported method for Files deployment, providing a centralized interface for managing Files instances.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Deployment Guide (available on the Nutanix Portal):

“To deploy a new instance of Nutanix Files, use the Files Console view in Prism Central. Navigate to the Files Console, select the option to create a new File Server, and configure the settings, including the number of FSVMs, network configuration, and storage allocation.”

An organization currently has a Files cluster for its office data, including all department shares. Most of the data is considered cold data, and the organization is looking to migrate it to free up space for future growth or newer data.

The organization has recently added an additional node with more storage. In addition, the organization is using the Public Cloud for .. storage needs.

What will be the best way to achieve this requirement?

Migrate cold data from Files to tape storage.

Back up the data using third-party software and replicate to the cloud.

Set up another cluster and replicate the data with Protection Domain.

Enable Smart Tiering in Files within the Files Console.

The organization uses a Nutanix Files cluster, part of Nutanix Unified Storage (NUS), for back office data, with most data classified as Cold Data (infrequently accessed). They want to free up space on the Files cluster for future growth or newer data. They have added a new node with more storage to the cluster and are already using the Public Cloud for other storage needs. The goal is to migrate Cold Data to free up space while considering the best approach.

Analysis of Options:

Option A (Migrate cold data from Files to tape storage): Incorrect. Migrating data to tape storage is a manual and outdated process for archival. Nutanix Files does not have native integration with tape storage, and this approach would require significant manual effort, making it less efficient than Smart Tiering. Additionally, tape storage is not as easily accessible as cloud storage for future retrieval.

Option B (Back up the data using third-party software and replicate to the cloud): Incorrect. While backing up data with third-party software and replicating it to the cloud is feasible, it is not the best approach for this scenario. This method would create a backup copy rather than freeing up space on the Files cluster, and it requires additional software and management overhead. Smart Tiering is a native feature that achieves the goal more efficiently by moving Cold Data to the cloud while keeping it accessible.

Option C (Set up another cluster and replicate the data with Protection Domain): Incorrect. Setting up another cluster and using a Protection Domain to replicate data is a disaster recovery (DR) strategy, not a solution for migrating Cold Data to free up space. Protection Domains are used to protect and replicate VMs or Volume Groups, not Files shares directly, and this approach would not address the goal of freeing up space on the existing Files cluster, since it would simply create a copy on another cluster.

Option D (Enable Smart Tiering in Files within the Files Console): Correct. Nutanix Files supports Smart Tiering, a feature that allows data to be tiered to external storage, such as the Public Cloud (e.g., AWS S3, Azure Blob), based on access patterns. Cold Data (infrequently accessed) can be automatically tiered to the cloud, freeing up space on the Files cluster while keeping the data accessible through the same share. Since the organization is already using the Public Cloud, Smart Tiering aligns perfectly with their infrastructure and requirements.

Why Option D?

Smart Tiering in Nutanix Files is designed for exactly this use case: moving Cold Data to a lower-cost storage tier (e.g., Public Cloud) to free up space on the primary cluster while maintaining seamless access to the data. Since the organization is already using the Public Cloud and has added a new node (which increases local capacity but doesn’t address Cold Data directly), Smart Tiering leverages their existing cloud infrastructure to offload Cold Data, freeing up space for future growth or newer data. This can be configured in the Files Console by enabling Smart Tiering and setting policies to tier Cold Data to the cloud.
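The selection step of such a policy can be sketched as an age-based filter. This is a minimal illustration, not the Nutanix implementation: the `FileRecord` type, `select_cold_files`, `bytes_freed`, the 180-day threshold, and the optional size floor are all hypothetical stand-ins for whatever criteria the real Smart Tiering policy exposes. It only models *which* files count as Cold Data and how much primary capacity moving them would release. The real feature also keeps tiered files visible and accessible through the same share, which this sketch does not model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FileRecord:
    """Hypothetical metadata record for one file on a share."""
    path: str
    size_bytes: int
    last_access: datetime


def select_cold_files(files, now, min_age_days, min_size_bytes=0):
    """Return files not accessed for more than min_age_days.

    Hypothetical policy: an access-age threshold plus an optional
    minimum size, standing in for a real tiering policy's criteria.
    """
    cutoff = now - timedelta(days=min_age_days)
    return [
        f for f in files
        if f.last_access < cutoff and f.size_bytes >= min_size_bytes
    ]


def bytes_freed(cold_files):
    """Primary-cluster capacity released if these files' data were
    moved to the cloud tier."""
    return sum(f.size_bytes for f in cold_files)


if __name__ == "__main__":
    now = datetime(2025, 1, 1)
    files = [
        FileRecord("/backoffice/2019/report.pdf", 5_000_000, datetime(2019, 3, 1)),
        FileRecord("/backoffice/2024/q4.xlsx", 200_000, datetime(2024, 12, 20)),
        FileRecord("/backoffice/archive/scan.tif", 40_000_000, datetime(2021, 6, 15)),
    ]
    cold = select_cold_files(files, now, min_age_days=180)
    print([f.path for f in cold])
    print(bytes_freed(cold))
```

Filtering on last-access time rather than modification time matches the "infrequently accessed" definition of Cold Data used in this question.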

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“Smart Tiering in Nutanix Files allows administrators to tier Cold Data to external storage, such as AWS S3 or Azure Blob, to free up space on the primary Files cluster. This feature can be enabled in the Files Console, where policies can be configured to identify and tier infrequently accessed data while keeping it accessible through the same share.”

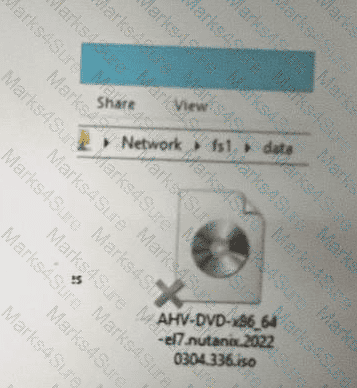

Refer to the exhibit.

What does the “X” represent on the icon?

Share Disconnected File

Corrupt ISO

Distributed shared file

Tiered File

The “X” on the icon represents a distributed shared file, which is a file that belongs to a distributed share or export. A distributed share or export is a type of SMB share or NFS export that distributes the hosting of top-level directories across multiple FSVMs. The “X” indicates that the file is not hosted by the current FSVM, but by another FSVM in the cluster. The “X” also helps to identify which files are eligible for migration when using the Nutanix Files Migration Tool. References: Nutanix Files Administration Guide, page 34; Nutanix Files Migration Tool User Guide, page 10

Which two methods can be used to upgrade Files? (Choose two.)

Prism Element - LCM

Prism Element - One-click

Prism Central - LCM

Prism Central - Files Manager

Nutanix Files, part of Nutanix Unified Storage (NUS), can be upgraded to newer versions to gain access to new features, bug fixes, and improvements. Upgrading Files involves updating the File Server Virtual Machines (FSVMs) and can be performed using Nutanix’s management tools.

Analysis of Options:

Option A (Prism Element - LCM): Incorrect. Life Cycle Manager (LCM) in Prism Element is used to manage upgrades for AOS, hypervisors, and other cluster components, but it does not directly handle Nutanix Files upgrades. Files upgrades are managed through Prism Central, as Files is a distributed service that requires centralized management.

Option B (Prism Element - One-click): Incorrect. Prism Element does not have a “one-click” upgrade option for Nutanix Files. One-click upgrades are typically associated with hypervisor upgrades (e.g., ESXi) or AOS upgrades, not Files. Files upgrades are performed via Prism Central.

Option C (Prism Central - LCM): Correct. Life Cycle Manager (LCM) in Prism Central can be used to upgrade Nutanix Files. LCM in Prism Central manages upgrades for Files by downloading the Files software bundle, distributing it to FSVMs, and performing a rolling upgrade to minimize downtime. This is a supported and recommended method for upgrading Files.
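The "rolling upgrade" idea can be illustrated with a short sketch. The function and its two callbacks (`upgrade_one`, `healthy`) are hypothetical hooks, not LCM APIs; they stand in for the real per-FSVM upgrade and post-upgrade health-check steps. The point shown is the ordering: FSVMs are processed one at a time, and the rollout halts at the first failure so that the remaining FSVMs stay on the known-good version.

```python
from typing import Callable, List


def rolling_upgrade(fsvms: List[str],
                    upgrade_one: Callable[[str], bool],
                    healthy: Callable[[str], bool]) -> List[str]:
    """Upgrade FSVMs one at a time and halt on the first failure.

    upgrade_one and healthy are hypothetical hooks standing in for the
    real upgrade and health-check steps. Returns the FSVMs that were
    upgraded and passed the health check, in order.
    """
    done: List[str] = []
    for vm in fsvms:
        # Only this FSVM is being upgraded; the others are untouched
        # and remain available while it is processed.
        if not upgrade_one(vm):
            raise RuntimeError(f"upgrade failed on {vm}; stopping rollout")
        # Verify the FSVM before moving on to the next one.
        if not healthy(vm):
            raise RuntimeError(f"{vm} unhealthy after upgrade; stopping rollout")
        done.append(vm)
    return done


if __name__ == "__main__":
    upgraded = rolling_upgrade(
        ["fsvm-1", "fsvm-2", "fsvm-3"],
        upgrade_one=lambda vm: True,  # pretend every upgrade succeeds
        healthy=lambda vm: True,      # pretend every health check passes
    )
    print(upgraded)
```

Stopping on the first failure, rather than continuing, avoids leaving several FSVMs in a failed state at once.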

Option D (Prism Central - Files Manager): Correct. The Files Manager (or Files Console) in Prism Central provides a UI for managing Nutanix Files, including upgrades. The administrator can use the Files Manager to initiate an upgrade by uploading a Files software bundle or selecting an available version, and the upgrade process is managed through Prism Central, ensuring a coordinated update across all FSVMs.

Selected Methods:

C: LCM in Prism Central automates the Files upgrade process, making it a streamlined method.

D: The Files Manager in Prism Central provides a manual upgrade option through the UI, offering flexibility for administrators.

Exact Extract from Nutanix Documentation:

From the Nutanix Files Administration Guide (available on the Nutanix Portal):

“Nutanix Files can be upgraded using two methods in Prism Central: Life Cycle Manager (LCM) and the Files Manager. LCM in Prism Central automates the upgrade process by downloading and applying the Files software bundle, while the Files Manager allows administrators to manually initiate the upgrade by uploading a software bundle or selecting an available version.”

An administrator has received alert AI303551 – VolumeGroupProtectionFailed. The alert details are as follows:

Which error log should the administrator review to determine why the relevant service is down?

solver.log

arithmos.ERROR

The error log that the administrator should review to determine why the relevant service is down is arithmos.ERROR. Arithmos is a service that runs on each CVM and provides volume group protection functionality for Volumes. Volume group protection is a feature that allows administrators to create protection policies for volume groups, which define how often snapshots are taken, how long they are retained, and where they are replicated. If the arithmos.ERROR log shows errors or exceptions related to volume group protection, this can indicate that the relevant service is down or not functioning properly. References: Nutanix Volumes Administration Guide, page 29; Nutanix Volumes Troubleshooting Guide

An administrator wants to monitor their Files environment for suspicious activities, such as mass deletion or access denials.

How can the administrator be alerted to such activities?

Configure Alerts & Events in the Files Console, filtering for Warning severity.

Deploy the File Analytics VM and configure anomaly rules.

Configure Files to use ICAP servers, with monitors for desired activities.

Create a data protection policy in the Files view in Prism Central.

The administrator can monitor their Files environment for suspicious activities, such as mass deletion or access denials, by deploying the File Analytics VM and configuring anomaly rules. File Analytics is a feature that provides insights into the usage and activity of file data stored on Files. File Analytics consists of a File Analytics VM (FAVM) that runs on a Nutanix cluster and communicates with the File Server VMs (FSVMs) that host the file shares. File Analytics can alert the administrator when there is an unusual or suspicious activity on file data, such as mass deletion, encryption, permission change, or access denial. The administrator can configure anomaly rules to define the threshold, time window, and notification settings for each type of anomaly. References: Nutanix Files Administration Guide, page 93; Nutanix File Analytics User Guide
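The threshold-plus-time-window idea behind an anomaly rule can be sketched as a per-user sliding window over audit events. This is a minimal illustration, not File Analytics' implementation: the `AuditEvent` schema, the operation names, and `detect_anomalies` are hypothetical. The sketch raises one alert when a user's count of a given operation within the trailing window first reaches the threshold, and re-arms once the count falls back below it.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical audit record; not the File Analytics schema."""
    timestamp: float  # seconds since epoch
    user: str
    operation: str    # e.g. "delete", "access_denied"


def detect_anomalies(events: Iterable[AuditEvent], operation: str,
                     threshold: int, window_seconds: float) -> List[Tuple[str, float]]:
    """Return (user, timestamp) whenever a user's count of `operation`
    events in the trailing window first reaches `threshold`.

    Events are assumed to arrive in timestamp order.
    """
    windows: Dict[str, Deque[float]] = defaultdict(deque)
    alerted: Dict[str, bool] = defaultdict(bool)
    alerts: List[Tuple[str, float]] = []
    for ev in events:
        if ev.operation != operation:
            continue  # this rule only watches one operation type
        q = windows[ev.user]
        q.append(ev.timestamp)
        # Drop events that have fallen out of the trailing window.
        while q and q[0] <= ev.timestamp - window_seconds:
            q.popleft()
        if len(q) >= threshold:
            if not alerted[ev.user]:
                alerts.append((ev.user, ev.timestamp))
                alerted[ev.user] = True  # suppress repeats for this burst
        else:
            alerted[ev.user] = False  # re-arm once activity drops
    return alerts


if __name__ == "__main__":
    burst = [AuditEvent(float(t), "alice", "delete") for t in range(6)]
    normal = [AuditEvent(2.5, "bob", "delete"), AuditEvent(3.5, "bob", "delete")]
    events = sorted(burst + normal, key=lambda e: e.timestamp)
    print(detect_anomalies(events, "delete", threshold=5, window_seconds=60))
```

Tracking a separate window per user means one user's mass deletion is flagged without being diluted by normal activity from everyone else.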

TESTED 18 Aug 2025