Your organization is building a real-time recommendation engine using ML models that process live user activity data stored in BigQuery and Cloud Storage. Each new model developed is saved to Artifact Registry. This new system deploys models to Google Kubernetes Engine and uses Pub/Sub for message queues. Recent industry news has been reporting attacks exploiting ML model supply chains. You need to enhance the security in this serverless architecture, specifically against risks to the development and deployment pipeline. What should you do?

An application running on a Compute Engine instance needs to read data from a Cloud Storage bucket. Your team does not allow Cloud Storage buckets to be globally readable and wants to ensure the principle of least privilege.

Which option meets the requirement of your team?

Your team needs to obtain a unified log view of all development cloud projects in your SIEM. The development projects are under the NONPROD organization folder with the test and pre-production projects. The development projects share the ABC-BILLING billing account with the rest of the organization.

Which logging export strategy should you use to meet the requirements?

Your company is moving to Google Cloud. You plan to sync your users first by using Google Cloud Directory Sync (GCDS). Some employees have already created Google Cloud accounts by using their company email addresses that were created outside of GCDS. You must create your users on Cloud Identity.

What should you do?

Your organization is developing a sophisticated machine learning (ML) model to predict customer behavior for targeted marketing campaigns. The BigQuery dataset used for training includes sensitive personal information. You must design the security controls around the AI/ML pipeline. Data privacy must be maintained throughout the model's lifecycle and you must ensure that personal data is not used in the training process. Additionally, you must restrict access to the dataset to an authorized subset of people only. What should you do?

Your organization needs to restrict the types of Google Cloud services that can be deployed within specific folders to enforce compliance requirements. You must apply these restrictions only to the designated folders without affecting other parts of the resource hierarchy. You want to use the most efficient and simple method. What should you do?

Your organization operates in a highly regulated environment and has a stringent set of compliance requirements for protecting customer data. You must encrypt data while in use to meet regulations. What should you do?

You need to set up a Cloud Interconnect connection between your company's on-premises data center and VPC host network. You want to make sure that on-premises applications can only access Google APIs over the Cloud Interconnect and not through the public internet. You are required to only use APIs that are supported by VPC Service Controls to mitigate against exfiltration risk to non-supported APIs. How should you configure the network?

Your company has deployed an artificial intelligence model in a central project. This model has a lot of sensitive intellectual property and must be kept strictly isolated from the internet. You must expose the model endpoint only to a defined list of projects in your organization. What should you do?

Your customer has an on-premises Public Key Infrastructure (PKI) with a certificate authority (CA). You need to issue certificates for many HTTP load balancer frontends. The on-premises PKI should be minimally affected due to many manual processes, and the solution needs to scale.

What should you do?

You work at a company in a regulated industry and are responsible for ongoing security of the Cloud environment. You need to prevent and detect misconfigurations in a particular folder based on specific compliance policies. You need to adhere to industry-specific compliance policies and policies that are internal to your company. What should you do?

A company has redundant mail servers in different Google Cloud Platform regions and wants to route customers to the nearest mail server based on location.

How should the company accomplish this?

You are part of a security team investigating a compromised service account key. You need to audit which new resources were created by the service account.

What should you do?

Your company must follow industry-specific regulations. Therefore, you need to enforce customer-managed encryption keys (CMEK) for all new Cloud Storage resources in the organization called org1.

What command should you execute?

A customer is collaborating with another company to build an application on Compute Engine. The customer is building the application tier in their GCP Organization, and the other company is building the storage tier in a different GCP Organization. This is a 3-tier web application. Communication between portions of the application must not traverse the public internet by any means.

Which connectivity option should be implemented?

Your organization has had a few recent DDoS attacks. You need to authenticate responses to domain name lookups. Which Google Cloud service should you use?

A security audit uncovered several inconsistencies in your project's Identity and Access Management (IAM) configuration. Some service accounts have overly permissive roles, and a few external collaborators have more access than necessary. You need to gain detailed visibility into changes to IAM policies, user activity, service account behavior, and access to sensitive projects. What should you do?

Your company's users access data in a BigQuery table. You want to ensure they can only access the data during working hours.

What should you do?

Your team sets up a Shared VPC Network where project co-vpc-prod is the host project. Your team has configured the firewall rules, subnets, and VPN gateway on the host project. They need to enable Engineering Group A to attach a Compute Engine instance to only the 10.1.1.0/24 subnet.

What should your team grant to Engineering Group A to meet this requirement?

Your company's storage team manages all product images within a specific Google Cloud project. To maintain control, you must isolate access to Cloud Storage for this project, allowing the storage team to manage restrictions at the project level. They must be restricted to using corporate computers. What should you do?

Your organization has established a highly sensitive project within a VPC Service Controls perimeter. You need to ensure that only users meeting specific contextual requirements—such as having a company-managed device, a specific location, and a valid user identity—can access resources within this perimeter. You want to evaluate the impact of this change without blocking legitimate access. What should you do?

Your organization is moving virtual machines (VMs) to Google Cloud. You must ensure that operating system images that are used across your projects are trusted and meet your security requirements.

What should you do?

A company is deploying their application on Google Cloud Platform. Company policy requires long-term data to be stored using a solution that can automatically replicate data over at least two geographic places.

Which Storage solution are they allowed to use?

A large e-retailer is moving to Google Cloud Platform with its ecommerce website. The company wants to ensure payment information is encrypted between the customer’s browser and GCP when customers check out online.

What should they do?

A customer implements Cloud Identity-Aware Proxy for their ERP system hosted on Compute Engine. Their security team wants to add a security layer so that the ERP systems only accept traffic from Cloud Identity-Aware Proxy.

What should the customer do to meet these requirements?

Your organization is using Google Cloud to develop and host its applications. Following Google-recommended practices, the team has created dedicated projects for development and production. Your development team is located in Canada and Germany. The operations team works exclusively from Germany to adhere to local laws. You need to ensure that admin access to Google Cloud APIs is restricted to these countries and environments. What should you do?

A customer wants to make it convenient for their mobile workforce to access a CRM web interface that is hosted on Google Cloud Platform (GCP). The CRM can only be accessed by someone on the corporate network. The customer wants to make it available over the internet. Your team requires an authentication layer in front of the application that supports two-factor authentication.

Which GCP product should the customer implement to meet these requirements?

Your company runs a website that will store PII on Google Cloud Platform. To comply with data privacy regulations, this data can only be stored for a specific amount of time and must be fully deleted after this specific period. Data that has not yet reached the time period should not be deleted. You want to automate the process of complying with this regulation.

What should you do?

Your company is concerned about unauthorized parties gaining access to the Google Cloud environment by using a fake login page. You must implement a solution to protect against person-in-the-middle attacks.

Which security measure should you use?

Your organization wants full control of the keys used to encrypt data at rest in their Google Cloud environments. Keys must be generated and stored outside of Google and integrate with many Google Services including BigQuery.

What should you do?

In a shared security responsibility model for IaaS, which two layers of the stack does the customer share responsibility for? (Choose two.)

Your organization recently activated the Security Command Center (SCC) standard tier. There are a few Cloud Storage buckets that were accidentally made accessible to the public. You need to investigate the impact of the incident and remediate it.

What should you do?

Employees at your company use their personal computers to access your organization's Google Cloud console. You need to ensure that users can only access the Google Cloud console from their corporate-issued devices and verify that they have a valid enterprise certificate.

What should you do?

You are responsible for the operation of your company's application that runs on Google Cloud. The database for the application will be maintained by an external partner. You need to give the partner team access to the database. This access must be restricted solely to the database and cannot extend to any other resources within your company's network. Your solution should follow Google-recommended practices. What should you do?

A business unit at a multinational corporation signs up for GCP and starts moving workloads into GCP. The business unit creates a Cloud Identity domain with an organizational resource that has hundreds of projects.

Your team becomes aware of this and wants to take over managing permissions and auditing the domain resources.

Which type of access should your team grant to meet this requirement?

You are implementing data protection by design and in accordance with GDPR requirements. As part of design reviews, you are told that you need to manage the encryption key for a solution that includes workloads for Compute Engine, Google Kubernetes Engine, Cloud Storage, BigQuery, and Pub/Sub. Which option should you choose for this implementation?

In order to meet PCI DSS requirements, a customer wants to ensure that all outbound traffic is authorized.

Which two cloud offerings meet this requirement without additional compensating controls? (Choose two.)

Your company is deploying a new application on GKE. The application handles sensitive customer data and is subject to strict data residency requirements. You need to ensure that the data is stored only within the europe-west4 region. What should you do?

You are designing a new governance model for your organization's secrets that are stored in Secret Manager. Currently, secrets for Production and Non-Production applications are stored and accessed using service accounts. Your proposed solution must:

• Provide granular access to secrets

• Give you control over the rotation schedules for the encryption keys that wrap your secrets

• Maintain environment separation

• Provide ease of management

Which approach should you take?

Your organization strives to be a market leader in software innovation. You provided a large number of Google Cloud environments so developers can test the integration of Gemini in Vertex AI into their existing applications or create new projects. Your organization has 200 developers and a five-person security team. You must prevent and detect violations of proper security policies across the Google Cloud environments. What should you do? (Choose 2 answers)

You need to centralize your team’s logs for production projects. You want your team to be able to search and analyze the logs using Logs Explorer. What should you do?

Your company’s chief information security officer (CISO) is requiring business data to be stored in specific locations due to regulatory requirements that affect the company’s global expansion plans. After working on a plan to implement this requirement, you determine the following:

• The services in scope are included in the Google Cloud data residency requirements.

• The business data remains within specific locations under the same organization.

• The folder structure can contain multiple data residency locations.

• The projects are aligned to specific locations.

You plan to use the Resource Location Restriction organization policy constraint with very granular control. At which level in the hierarchy should you set the constraint?

Your organization is rolling out a new continuous integration and delivery (CI/CD) process to deploy infrastructure and applications in Google Cloud. Many teams will use their own instances of the CI/CD workflow. It will run on Google Kubernetes Engine (GKE). The CI/CD pipelines must be designed to securely access Google Cloud APIs.

What should you do?

Your organization previously stored files in Cloud Storage by using Google Managed Encryption Keys (GMEK) but has recently updated the internal policy to require Customer Managed Encryption Keys (CMEK). You need to re-encrypt the files quickly and efficiently with minimal cost.

What should you do?

A company’s application is deployed with a user-managed Service Account key. You want to use Google-recommended practices to rotate the key.

What should you do?

Your organization must follow the Payment Card Industry Data Security Standard (PCI DSS). To prepare for an audit, you must detect deviations at an infrastructure-as-a-service level in your Google Cloud landing zone. What should you do?

You plan to use a Google Cloud Armor policy to prevent common attacks such as cross-site scripting (XSS) and SQL injection (SQLi) from reaching your web application's backend. What are two requirements for using Google Cloud Armor security policies? (Choose two.)

All logs in your organization are aggregated into a centralized Google Cloud logging project for analysis and long-term retention. While most of the log data can be viewed by operations teams, there are specific sensitive fields (e.g., protoPayload.authenticationInfo.principalEmail) that contain identifiable information that should be restricted only to security teams. You need to implement a solution that allows different teams to view their respective application logs in the centralized logging project. It must also restrict access to specific sensitive fields within those logs to only a designated security group. Your solution must ensure that other fields in the same log entry remain visible to other authorized groups. What should you do?

You are implementing communications restrictions for specific services in your Google Cloud organization. Your data analytics team works in a dedicated folder. You need to ensure that access to BigQuery is controlled for that folder and its projects. The data analytics team must be able to control the restrictions only at the folder level. What should you do?

Your organization is using Google Workspace, Google Cloud, and a third-party SIEM. You need to export events such as user logins, successful logins, and failed logins to the SIEM. Logs need to be ingested in real time or near real time. What should you do?

You are migrating an on-premises data warehouse to BigQuery, Cloud SQL, and Cloud Storage. You need to configure security services in the data warehouse. Your company compliance policies mandate that the data warehouse must:

• Protect data at rest with full lifecycle management on cryptographic keys

• Implement a separate key management provider from data management

• Provide visibility into all encryption key requests

What services should be included in the data warehouse implementation?

Choose 2 answers

An organization is starting to move its infrastructure from its on-premises environment to Google Cloud Platform (GCP). The first step the organization wants to take is to migrate its current data backup and disaster recovery solutions to GCP for later analysis. The organization’s production environment will remain on-premises for an indefinite time. The organization wants a scalable and cost-efficient solution.

Which GCP solution should the organization use?

Your security team wants to implement a defense-in-depth approach to protect sensitive data stored in a Cloud Storage bucket. Your team has the following requirements:

• The Cloud Storage bucket in Project A can only be readable from Project B.

• The Cloud Storage bucket in Project A cannot be accessed from outside the network.

• Data in the Cloud Storage bucket cannot be copied to an external Cloud Storage bucket.

What should the security team do?

An organization adopts Google Cloud Platform (GCP) for application hosting services and needs guidance on setting up password requirements for their Cloud Identity account. The organization has a password policy requirement that corporate employee passwords must have a minimum number of characters.

Which Cloud Identity password guidelines can the organization use to inform their new requirements?

Your organization has hired a small, temporary partner team for 18 months. The temporary team will work alongside your DevOps team to develop your organization's application that is hosted on Google Cloud. You must give the temporary partner team access to your application's resources on Google Cloud and ensure that partner employees lose access if they are removed from their employer's organization. What should you do?

Your organization uses Google Workspace as the primary identity provider for Google Cloud. Users in your organization initially created their passwords. You need to improve password security due to a recent security event. What should you do?

Your organization relies heavily on virtual machines (VMs) in Compute Engine. Due to team growth and resource demands, VM sprawl is becoming problematic. Maintaining consistent security hardening and timely package updates poses an increasing challenge. You need to centralize VM image management and automate the enforcement of security baselines throughout the virtual machine lifecycle. What should you do?

You are setting up a CI/CD pipeline to deploy containerized applications to your production clusters on Google Kubernetes Engine (GKE). You need to prevent containers with known vulnerabilities from being deployed. You have the following requirements for your solution:

• Must be cloud-native

• Must be cost-efficient

• Must minimize operational overhead

How should you accomplish this? (Choose two.)

Your organization is transitioning to Google Cloud. You want to ensure that only trusted container images are deployed on Google Kubernetes Engine (GKE) clusters in a project. The containers must be deployed from a centrally managed Container Registry and signed by a trusted authority.

What should you do?

Choose 2 answers

Applications often require access to “secrets” - small pieces of sensitive data at build or run time. The administrator managing these secrets on GCP wants to keep track of “who did what, where, and when?” within their GCP projects.

Which two log streams would provide the information that the administrator is looking for? (Choose two.)

You are using Security Command Center (SCC) to protect your workloads and receive alerts for suspected security breaches at your company. You need to detect cryptocurrency mining software. Which SCC service should you use?

Your financial services company has an audit requirement under a strict regulatory framework that requires comprehensive, immutable audit trails for all administrative and data access activity that ensures that data is kept for seven years. Your current logging is fragmented across individual projects. You need to establish a centralized, tamper-proof, long-term logging solution accessible for audits. What should you do?

Your team wants to limit users with administrative privileges at the organization level.

Which two roles should your team restrict? (Choose two.)

Your Google Cloud organization allows for administrative capabilities to be distributed to each team through provision of a Google Cloud project with Owner role (roles/owner). The organization contains thousands of Google Cloud projects. Security Command Center Premium has surfaced multiple OPEN_MYSQL_PORT findings. You are enforcing the guardrails and need to prevent these types of common misconfigurations.

What should you do?

You manage a mission-critical workload for your organization, which is in a highly regulated industry. The workload uses Compute Engine VMs to analyze and process the sensitive data after it is uploaded to Cloud Storage from the endpoint computers. Your compliance team has detected that this workload does not meet the data protection requirements for sensitive data. You need to meet these requirements:

• Manage the data encryption key (DEK) outside the Google Cloud boundary.

• Maintain full control of encryption keys through a third-party provider.

• Encrypt the sensitive data before uploading it to Cloud Storage

• Decrypt the sensitive data during processing in the Compute Engine VMs

• Encrypt the sensitive data in memory while in use in the Compute Engine VMs

What should you do?

Choose 2 answers

A manager wants to start retaining security event logs for 2 years while minimizing costs. You write a filter to select the appropriate log entries.

Where should you export the logs?

Your company is using G Suite and has developed an application meant for internal usage on Google App Engine. You need to make sure that an external user cannot gain access to the application even when an employee’s password has been compromised.

What should you do?

A website design company recently migrated all customer sites to App Engine. Some sites are still in progress and should only be visible to customers and company employees from any location.

Which solution will restrict access to the in-progress sites?

Your financial services company is migrating its operations to Google Cloud. You are implementing a centralized logging strategy to meet strict regulatory compliance requirements. Your company's Google Cloud organization has a dedicated folder for all production projects. All audit logs, including Data Access logs from all current and future projects within this production folder, must be securely collected and stored in a central BigQuery dataset for long-term retention and analysis. To prevent duplicate log storage and to enforce centralized control, you need to implement a logging solution that intercepts and overrides any project-level log sinks for these audit logs, to ensure that logs are not inadvertently routed elsewhere. What should you do?

You are working with protected health information (PHI) for an electronic health record system. The privacy officer is concerned that sensitive data is stored in the analytics system. You are tasked with anonymizing the sensitive data in a way that is not reversible. Also, the anonymized data should not preserve the character set and length. Which Google Cloud solution should you use?

You are working with a network engineer at your company who is extending a large BigQuery-based data analytics application. Currently, all of the data for that application is ingested from on-premises applications over a Dedicated Interconnect connection with a 20Gbps capacity. You need to onboard a data source on Microsoft Azure that requires a daily ingestion of approximately 250 TB of data. You need to ensure that the data gets transferred securely and efficiently. What should you do?

You manage one of your organization's Google Cloud projects (Project A). A VPC Service Controls (VPC SC) perimeter is blocking API access requests to this project, including Pub/Sub. A resource running under a service account in another project (Project B) needs to collect messages from a Pub/Sub topic in your project. Project B is not included in a VPC SC perimeter. You need to provide access from Project B to the Pub/Sub topic in Project A using the principle of least privilege.

What should you do?

Your organization wants to publish yearly reports of your website usage analytics. You must ensure that no data with personally identifiable information (PII) is published by using the Cloud Data Loss Prevention (Cloud DLP) API. Data integrity must be preserved. What should you do?

Your company is developing a new application for your organization. The application consists of two Cloud Run services, service A and service B. Service A provides a web-based user front-end. Service B provides back-end services that are called by service A. You need to set up identity and access management for the application. Your solution should follow the principle of least privilege. What should you do?

Your organization is migrating its primary web application from on-premises to Google Kubernetes Engine (GKE). You must advise the development team on how to grant their applications access to Google Cloud services from within GKE according to security recommended practices. What should you do?

Your financial services company needs to process customer personally identifiable information (PII) for analytics while adhering to strict privacy regulations. You must transform this data to protect individual privacy while ensuring that the data retains its original format and consistency for analytical integrity. Your solution must avoid full irreversible deletion. What should you do?

Your organization has Google Cloud applications that require access to external web services. You must monitor, control, and log access to these services. What should you do?

You plan to deploy your cloud infrastructure using a CI/CD cluster hosted on Compute Engine. You want to minimize the risk of its credentials being stolen by a third party. What should you do?

Your organization must comply with the regulation to keep instance logging data within Europe. Your workloads will be hosted in the Netherlands in region europe-west4 in a new project. You must configure Cloud Logging to keep your data in the country.

What should you do?

Your team needs to prevent users from creating projects in the organization. Only the DevOps team should be allowed to create projects on behalf of the requester.

Which two tasks should your team perform to handle this request? (Choose two.)

You work for a large organization that runs many custom training jobs on Vertex AI. A recent compliance audit identified a security concern. All jobs currently use the Vertex AI service agent. The audit mandates that each training job must be isolated, with access only to the required Cloud Storage buckets, following the principle of least privilege. You need to design a secure, scalable solution to enforce this requirement. What should you do?

You are consulting with a client that requires end-to-end encryption of application data (including data in transit, data in use, and data at rest) within Google Cloud. Which options should you utilize to accomplish this? (Choose two.)

Your organization wants to be continuously evaluated against CIS Google Cloud Computing Foundations Benchmark v1.3.0 (CIS Google Cloud Foundation 1.3). Some of the controls are irrelevant to your organization and must be disregarded in evaluation. You need to create an automated system or process to ensure that only the relevant controls are evaluated.

What should you do?

A customer deploys an application to App Engine and needs to check for Open Web Application Security Project (OWASP) vulnerabilities.

Which service should be used to accomplish this?

You need to enable VPC Service Controls and allow changes to perimeters in existing environments without preventing access to resources. Which VPC Service Controls mode should you use?

You have just created a new log bucket to replace the _Default log bucket. You want to route all log entries that are currently routed to the _Default log bucket to this new log bucket in the most efficient manner. What should you do?

You are a member of the security team at an organization. Your team has a single GCP project with credit card payment processing systems alongside web applications and data processing systems. You want to reduce the scope of systems subject to PCI audit standards.

What should you do?

Your company conducts clinical trials and needs to analyze the results of a recent study that are stored in BigQuery. The interval when the medicine was taken contains start and stop dates. The interval data is critical to the analysis, but specific dates may identify a particular batch and introduce bias. You need to obfuscate the start and end dates for each row and preserve the interval data.

What should you do?

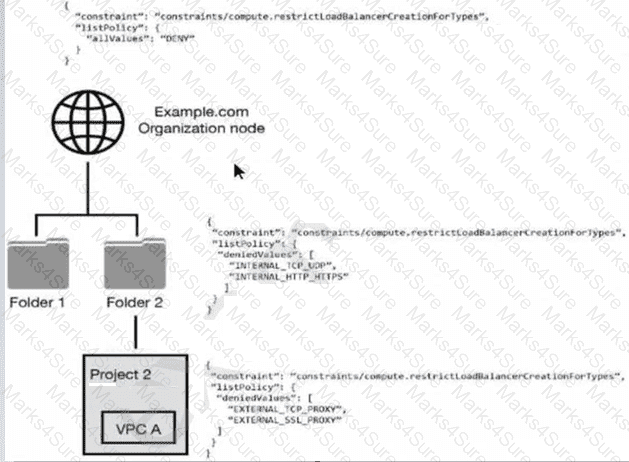

You have the following resource hierarchy. There is an organization policy at each node in the hierarchy as shown. Which load balancer types are denied in VPC A?